Auto-tools/Projects/Stockwell/backfill-retrigger

Latest revision as of 15:42, 29 March 2018

finding bugs to work on

We have a fresh oranges dashboard (https://charts.mozilla.org/FreshOranges/index.html), which looks like neglected oranges except that it shows new high-frequency failures and ignores [stockwell infra] bugs.

As these are new bugs, some of them will be infra or harness related. Use this as an opportunity to annotate them with [stockwell infra] if the failure is build, taskcluster, network, or machine related. Otherwise, the rules are similar to disable-recommended: if a test case is in the bugzilla summary, we should be able to retrigger and find the patch that caused the failure to become so frequent.

Skip test-verify bugs: test-verify already repeats tests, and only runs tests which were modified on a push. There is no need to retrigger or backfill a test-verify failure.

choosing a config to test

It is best to look at the existing pattern of data across all the starred instances. When adding a comment to a bug while triaging, it is normal to list the configurations the failures are most frequent on. Usually pick the most frequent configuration; if two configurations are tied, choose both.

If there is not a clear winner, then consider a few factors which could help:

- debug typically provides more data than opt, but takes longer

- pgo is harder to backfill and builds take longer: try to avoid this

- ccov/jsdcov builds/tests are only run on mozilla-central: avoid these configs

- nightly is only run on mozilla-central: avoid these configs

- mac osx has a limited device pool: try to pick linux or windows
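One way to apply these selection rules is sketched below. It assumes you have copied one config string per starred failure out of the bug. The config string format and the `AVOID_MARKERS` substrings are hypothetical stand-ins, not Treeherder's exact platform names. The sketch takes the most frequent config and takes both configs on a two-way tie. It only falls back to the avoid list when three or more configs are tied, which is one reading of "not a clear winner".

```python
from collections import Counter

# Hypothetical substrings marking configs the list above suggests avoiding
# (pgo, ccov/jsdcov, nightly, mac osx). Adjust to the names you actually see.
AVOID_MARKERS = ("pgo", "ccov", "jsdcov", "nightly", "osx")


def pick_configs(starred_configs):
    """Pick config(s) to retrigger from one config string per starred failure."""
    counts = Counter(starred_configs)
    if not counts:
        return []
    ranked = counts.most_common()  # ties keep first-seen order
    best = ranked[0][1]
    tied = [cfg for cfg, n in ranked if n == best]
    if len(tied) <= 2:
        # A clear winner, or a tie between two configs: test both.
        return tied
    # No clear winner: prefer configs that are not on the avoid list.
    preferred = [cfg for cfg in tied if not any(m in cfg for m in AVOID_MARKERS)]
    return (preferred or tied)[:2]


print(pick_configs(["linux64/debug"] * 5 + ["windows7-32/opt"] * 2))
```

The avoid list only acts as a tie-breaker here. A clearly dominant pgo or mac config is still returned, because the failure data outweighs the convenience factors.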

choosing a starting point

Ideally you want to pick the first instance of a failure and work backwards in time to find the root cause. In practice this can be confusing as we have multiple branches or sometimes different configs that fail at different times.

I would look at the first 10 failures and weigh:

- which branch is most common

- where the timestamps cluster close to each other

- whether the most common config is on the same branch and has close timestamps

In many cases you will pick a different failure as the starting point. I often pick the second instance of the branch/config so I can confirm that multiple revisions show the failure (a pattern).

BEWARE - in many cases the first failure posted is not the earliest revision. Timestamps in orangefactor are based on when the job was completed, not when the revision was pushed.

The above example shows that windows 7 opt/pgo is common. I am picking win7-pgo on mozilla-inbound because that is where the pattern seems to be most frequent.
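The weighing above, together with the BEWARE note about timestamps, can be sketched as a small helper. This is a hypothetical function, not an existing Stockwell tool. The `Failure` record and its fields are assumptions that you would fill in from the orangefactor listing. The helper takes the first 10 failures in listing order (job completion time), picks the most common branch and then the most common config on that branch, and re-sorts those candidates by push time. By default it returns the second-earliest push, following the habit described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Failure:
    revision: str
    branch: str
    config: str
    push_time: int      # when the revision was pushed (e.g. epoch seconds)
    job_end_time: int   # when the job completed; orangefactor's timestamp


def pick_starting_point(failures, sample=10, prefer_second=True):
    """Choose a failure whose revision is a reasonable place to start retriggering."""
    if not failures:
        return None
    # Orangefactor timestamps are job completion times, so take the first
    # `sample` failures in that order...
    first = sorted(failures, key=lambda f: f.job_end_time)[:sample]
    branch = Counter(f.branch for f in first).most_common(1)[0][0]
    on_branch = [f for f in first if f.branch == branch]
    config = Counter(f.config for f in on_branch).most_common(1)[0][0]
    # ...then order the matching candidates by when they were actually pushed.
    candidates = sorted(
        (f for f in on_branch if f.config == config), key=lambda f: f.push_time
    )
    # Starting from the second-earliest push means the walk back in history
    # passes through at least one more failing revision (a pattern).
    if prefer_second and len(candidates) > 1:
        return candidates[1]
    return candidates[0]
```

A failure that completed first in orangefactor can still belong to a later push, so the final choice is always made on `push_time`.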

how to find which job to retrigger

Once you have the revision and config, you need to figure out the job. Typically a test fails in a specific job, and the job name often includes a chunk number: we have thousands of tests, split across many jobs in chunks. The chunks are dynamically balanced. If a test is added or removed as part of a commit, the chunks will most likely rebalance, and tests often end up running in a different chunk.
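The following deliberately naive chunker shows why tests move between chunks. It sorts the test paths and cuts them into contiguous, nearly equal groups. It is an illustrative stand-in, not the harness's real balancing algorithm. It still shows the effect described above: removing one test shifts the chunk boundaries, so a test that was in chunk 2 can end up in chunk 1.

```python
def split_into_chunks(tests, total_chunks):
    """Naive chunker: sort tests and cut them into contiguous, nearly equal
    groups. Returns {test: chunk_number} with 1-based chunk numbers."""
    ordered = sorted(tests)
    base, extra = divmod(len(ordered), total_chunks)
    assignment = {}
    start = 0
    for chunk in range(1, total_chunks + 1):
        # The first `extra` chunks get one additional test each.
        size = base + (1 if chunk <= extra else 0)
        for test in ordered[start:start + size]:
            assignment[test] = chunk
        start += size
    return assignment


before = split_into_chunks(["b.js", "c.js", "d.js", "e.js", "f.js", "g.js"], 3)
after = split_into_chunks(["c.js", "d.js", "e.js", "f.js", "g.js"], 3)  # b.js removed
print(before["d.js"], after["d.js"])  # 2 1
```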

Picking the first job is easy: it is usually obvious once you have chosen the config to run against and pulled up the starting revision. For example, it might be linux64/debug mochitest-browser-chrome-e10s-3.

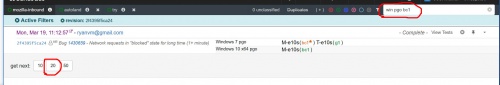

In the above picture, we filter on |win pgo bc1| and then click the '20' link to load 20 more revisions.

The picture also shows bc1 available to retrigger on many revisions, with the specific error highlighted in the preview pane. I have circled the 'retrigger' button.

As a sanity check, I pull up the log file and search for the test name. It should show up in a TEST-START line, followed shortly after by TEST-UNEXPECTED-FAIL.

When retriggering on previous revisions, repeat this check to make sure the test actually runs in the chunk you selected (for example, chunk 3). On older revisions the test may well be in a different chunk number.
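The log check described above can be scripted. This sketch assumes you have already downloaded the raw log text for the job; how you fetch it is up to you. The helper `check_log_for_test` is hypothetical. It looks for a line containing both TEST-START and the test path, and then for a later TEST-UNEXPECTED-FAIL line for the same path. The plain substring match is an assumption that works for the usual `TEST-START | path` lines, but it can also match a longer path that happens to contain yours.

```python
def check_log_for_test(log_text, test_path):
    """Return (started, failed_after_start) for test_path in a raw job log."""
    started = failed = False
    for line in log_text.splitlines():
        if test_path not in line:
            continue
        if "TEST-START" in line:
            started = True  # the test ran in this chunk
        elif started and "TEST-UNEXPECTED-FAIL" in line:
            failed = True   # and it failed after starting
    return started, failed


sample_log = "\n".join([
    "12:00:01 INFO - TEST-START | browser/base/content/test/browser_foo.js",
    "12:00:05 INFO - TEST-UNEXPECTED-FAIL | browser/base/content/test/browser_foo.js | uncaught exception",
])
print(check_log_for_test(sample_log, "browser_foo.js"))  # (True, True)
```

A result of `(False, False)` on an older revision usually means the test ran in a different chunk there, so you should pick another chunk before retriggering.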

how many retriggers

There is not an exact science here, but I typically choose 40 as my target. This makes it easy to see a pattern and to estimate the percentage of passing/failing jobs per revision. If a test fails 10% of the time, we would expect about 1 failure in 10 data points. With 20 data points we might see something like 18 green jobs and 2 failures, so it is often wise to get at least 20 data points. I choose 40 because, at a 10% failure rate, it gives stronger evidence of what the failure rate really is.
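The arithmetic behind these targets can be checked quickly. This is a minimal sketch that assumes each run fails independently at a fixed rate, which real intermittents do not always do. It compares the expected number of failures with the chance of seeing at least one failure, using 1 - (1 - p)^n.

```python
def expected_failures(failure_rate, data_points):
    """Average number of failures over data_points independent runs."""
    return failure_rate * data_points


def chance_of_seeing_failure(failure_rate, data_points):
    """Probability of at least one failure in data_points independent runs."""
    return 1 - (1 - failure_rate) ** data_points


for n in (10, 20, 40):
    print(n, round(expected_failures(0.1, n), 1), round(chance_of_seeing_failure(0.1, n), 3))
```

At a 10% rate, 10 data points give 1 expected failure but only about a 65% chance of seeing any failure at all. 20 data points raise that to about 88%, and 40 to about 98.5%. This is why 10 is too few and 20 to 40 is a comfortable range.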

The data from orangefactor cannot tell us the real failure rate. It reports X failures in Y pushes (for example, 30 failures in 150 pushes). While that looks like a 20% failure rate, it can be misleading for a few reasons:

- we do not run every job/chunk on every push, so it could be 30 failures in 75 data points (a 40% failure rate)

- there could be retriggers in the existing data, with 3 or 4 failures on a few pushes, so the test may actually fail less than 20% of the time

The above shows 20 retriggers (21 data points per revision) for the bc1 job. 40 would give a clearer pattern, but I wanted to save some resources and confirm that 20 retriggers would show the error and possibly narrow the range.
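The two orangefactor caveats can be made concrete with a small sketch. Each push is a hypothetical `(data_points, failures)` pair. The first dataset matches the "30 failures in 75 data points" case, where the job ran on only half of the 150 pushes. The second bunches the 30 failures as 3 per push on 10 retriggered pushes.

```python
def summarize(pushes):
    """pushes: one (data_points, failures) pair per push."""
    total_failures = sum(f for _, f in pushes)
    total_points = sum(n for n, _ in pushes)
    failing_pushes = sum(1 for _, f in pushes if f > 0)
    return {
        # What "X failures in Y pushes" suggests.
        "failures_per_push": total_failures / len(pushes),
        # The rate per job that actually ran.
        "failures_per_data_point": total_failures / total_points,
        # How many pushes saw the failure at all.
        "share_of_pushes_failing": failing_pushes / len(pushes),
    }


# 150 pushes, but the job only ran on 75 of them: 30 failures in 75 data points.
skipped = [(1, 1)] * 30 + [(1, 0)] * 45 + [(0, 0)] * 75
# 150 pushes, with the 30 failures bunched as 3 per push on 10 retriggered pushes.
bunched = [(4, 3)] * 10 + [(1, 0)] * 140

for name, data in (("skipped", skipped), ("bunched", bunched)):
    print(name, {k: round(v, 3) for k, v in summarize(data).items()})
```

Both datasets read as "30 failures in 150 pushes". In the first, the rate per data point is 40%. In the second, only about 7% of pushes failed at all. This is why retriggering for your own data points is more reliable than the orangefactor ratio.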

backfilling

When a job only runs every 5th push (or only as needed), there is a larger range of commits between the last passing and the first failing push. In this case we need to backfill.

Here we have to backfill first, then retrigger the job once it is scheduled. One caution: if the test is in a chunked job, you might need to backfill all the chunks. A trick is to check whether the test is in chunk X both before and after the backfill range. If it is, the chance of it being in the same chunk throughout the range is very high.

Also, if a build fails while backfilling, you will not be able to pinpoint the specific commit. The same happens when people land multiple patches/bugs in the same commit: we just have less granularity.
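The following sketch finds which pushes need a backfill. It assumes you have written down, oldest to newest, each push on the branch and whether the job passed, failed, or did not run there (`None`). The helper name and the `"pass"`/`"fail"` labels are hypothetical simplifications. For an intermittent, treat a push as passing only once enough retriggers on it are green.

```python
def revisions_to_backfill(pushes):
    """pushes: oldest-to-newest (revision, result) pairs, where result is
    "pass", "fail", or None if the job was not run on that push.
    Returns the revisions without data between the last pass and first fail."""
    first_fail = next((i for i, (_, r) in enumerate(pushes) if r == "fail"), None)
    if first_fail is None:
        return []  # nothing failing yet, so no range to narrow down
    last_pass = max(
        (i for i, (_, r) in enumerate(pushes[:first_fail]) if r == "pass"),
        default=-1,
    )
    return [rev for rev, r in pushes[last_pass + 1:first_fail] if r is None]


# The job ran every 5th push: r1 passed, r6 failed, r2..r5 have no data.
history = [("r1", "pass"), ("r2", None), ("r3", None), ("r4", None),
           ("r5", None), ("r6", "fail"), ("r7", None)]
print(revisions_to_backfill(history))  # ['r2', 'r3', 'r4', 'r5']
```

Once these pushes are backfilled, you can retrigger them as usual. If a build in the range fails, that push stays without data and the range cannot be narrowed further.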

what to do with the data

Once you have retriggered/backfilled a job, wait for it to finish. Opt tests usually finish in under 30 minutes once they start running; debug tests can take up to 1 hour.

When your initial tests finish, you might see a view like this:

![TH_repeat](TH_repeat.jpg)

Here you can see 2-4 oranges per push. Check each failure to make sure the same test is failing. In the above case it is, so we need to go further back in history.

After repeating the process a few times, the root cause will become visible:

![TH_rootcause](TH_rootcause.jpg)

You can see that the failing test moved from bc1 to bc2, so the filter is now on bc instead of bc1. There is a clear pattern of failures on every push, and almost no failures before the offending patch landed.
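Reading the final view can also be expressed as code. Starting from the newest push, the helper walks back while every push keeps failing above a threshold. It then reports the oldest push in that run as the suspect. The data shape, the helper name, and the 5% threshold are assumptions for this sketch. The failure counts should only include failures you have confirmed are the same test. A push with no runs stops the walk, because that is where more backfilling or retriggering is needed.

```python
def suspect_push(pushes, threshold=0.05):
    """pushes: oldest-to-newest (revision, runs, failures) after retriggering.
    Returns the oldest revision of the trailing run of pushes whose failure
    rate is above threshold, or None if the newest push is not failing."""
    suspect = None
    for revision, runs, failures in reversed(pushes):
        # A push with no data, or with (almost) no failures, ends the failing run.
        if runs == 0 or failures / runs <= threshold:
            break
        suspect = revision
    return suspect


retriggered = [("r1", 21, 0), ("r2", 21, 1), ("r3", 21, 0),
               ("r4", 21, 4), ("r5", 21, 3), ("r6", 21, 5)]
print(suspect_push(retriggered))  # r4
```

The isolated failure on r2 falls under the threshold, which matches "almost no failures before the offending patch landed".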

exceptions and odd things

Some common exceptions to watch out for:

- tests moving between chunks

- tests added or removed

- infrastructure issues

- time-dependent failures (failed a lot, but passed on retriggers)

- unable to backfill due to broken builds/tests

- other tests may fail in the same chunk: look at each failure to ensure it is the right one

- if the root cause looks like a merge, repeat on the other integration branch

- rarely, failures start on mozilla-central landings or as a result of code merging

- sometimes it is obvious from check-in messages (or test-verify (TV) failures) that the failing test case was modified on a certain push. If the test was modified around the time it started failing, that is suspicious and can be used as a shortcut to find the regressing changeset.
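The check-in shortcut in the last item can be sketched as follows. It assumes you already have, for each push in the suspect range, the list of files it changed (for example, copied from the pushlog). `pushes_touching` is a hypothetical helper. It only catches edits to the test file itself, not to shared support files the test depends on.

```python
def pushes_touching(pushes, test_path):
    """pushes: oldest-to-newest (revision, changed_files) pairs.
    Returns revisions that changed a file matching test_path exactly or
    ending in "/" + test_path (so a bare file name also works)."""
    return [
        rev
        for rev, files in pushes
        if any(f == test_path or f.endswith("/" + test_path) for f in files)
    ]


pushlog = [
    ("r1", ["dom/base/nsDocument.cpp"]),
    ("r2", ["browser/base/content/test/general/browser_foo.js",
            "browser/base/content/browser.js"]),
    ("r3", ["browser/base/content/test/general/browser_foo_bar.js"]),
]
print(pushes_touching(pushlog, "browser_foo.js"))  # ['r2']
```

A hit is only a lead. You still need to confirm it with retriggers around that push before naming it as the regressing changeset.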