Firefox OS/Vaani/Custom Command

Revision as of 07:27, 13 July 2015

What is Custom Command?

Custom Command is a feature that lets 3rd party apps register their own voice commands. In the short term, voice commands will be limited to predefined actions. We may use the Action type of schema.org as a base and extend it into an action list. 3rd party apps should use JSON-LD to state which actions they support. Once an action is detected, Vaani calls the corresponding app to handle it.

Prerequisite

Install Vaani on your Firefox OS device

- Have the Web Speech API available on your device. (Currently, the API is only available on B2G nightly builds.)

- Install Vaani on your device:

  - Download Vaani from https://github.com/kdavis-mozilla/fxos-voice-commands

  - Connect your device.

  - Open your Firefox browser and select Tools -> Web Developer -> WebIDE.

  - Select Project -> Open Packaged App, and choose the folder where you placed the downloaded app.

  - Click the Play icon.

Implementation

(TBD)(brief introduction)

Architecture

(TBD)(graph and explain)

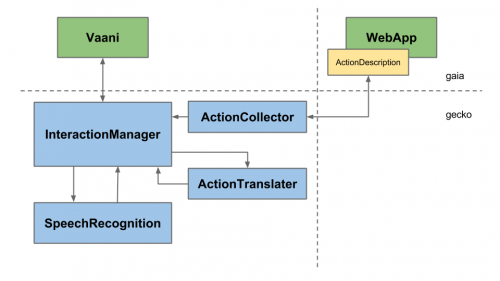

The following picture shows the system architecture :

We will explain this architecture by components.

ActionCollector

ActionCollector parses the actions from ActionDescriptions and stores them. Once it receives an action trigger event, it forwards the action to the corresponding web app.

InteractionManager

InteractionManager is the main controller of this system. It listens to the recognition results from SpeechRecognition and asks ActionCollector to trigger the related action.

ActionTranslater

When InteractionManager receives the recognition results from SpeechRecognition, it gets only raw sentences. ActionTranslater has to translate these results into real actions.

Vaani

Vaani is the UI of this system. It tells users the state of the system and interacts with them to give them access to it.

Our ultimate goal is to use the JSON-LD format to describe actions; this is covered in the following section.

At the current stage, we do not implement ActionTranslater either; we just register predefined grammars with SpeechRecognition.
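The flow between the components described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the Vaani source: the method names (`register`, `trigger`, `onResult`) and the `translate` callback standing in for ActionTranslater are hypothetical.

```javascript
// Hypothetical sketch of the component flow; the method names below are
// illustrative and are not taken from the Vaani source.

class ActionCollector {
  constructor() {
    this.actions = new Map(); // action type -> handler supplied by an app
  }
  // Store an action parsed from an app's ActionDescription.
  register(type, handler) {
    this.actions.set(type, handler);
  }
  // Forward a triggered action to the app that registered it.
  trigger(action) {
    const handler = this.actions.get(action.type);
    if (!handler) {
      return false;
    }
    handler(action);
    return true;
  }
}

class InteractionManager {
  // `translate` stands in for ActionTranslater: raw sentence -> action or null.
  constructor(collector, translate) {
    this.collector = collector;
    this.translate = translate;
  }
  // Called with each recognition result from SpeechRecognition.
  onResult(transcript) {
    const action = this.translate(transcript);
    return action ? this.collector.trigger(action) : false;
  }
}

// Usage: one registered dial handler and a trivial translator.
const collector = new ActionCollector();
const dialed = [];
collector.register('DialAction', (action) => dialed.push(action.number));

const manager = new InteractionManager(collector, (text) =>
  text.startsWith('call ') ? { type: 'DialAction', number: text.slice(5) } : null
);
manager.onResult('call 123');
```

Keeping the translator as an injected function mirrors the current stage, where no ActionTranslater exists and the predefined grammar does the matching.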

Data structure

(TBD)(definition and explain)

JSON-LD and manifest.webapp

Since JSON-LD is not implemented yet, we use manifest.webapp to hold the information about supported actions. To support custom commands, an app should add the following section to its manifest.webapp:

    "custom_commands": [{
      "@context": "http://schema.org",
      "@type": "WebApplication",
      "url": "/calendar/index.html",
      "potentialAction": {
        "@type": "DialAction",
        "target": {
          "url": "/dailer/index.html?dail={telephone}"
        }
      },
      "potentialObject": {
        "@type": ["Text", "Person"]
      }
    }]

In this case, we create a dial command whose object is either a telephone number given as text or a person. Once a user says this command, the URL /dailer/index.html?dail={telephone} is called, with {telephone} replaced by the recognized value.
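As an illustration of how such a target URL could be filled in, here is a minimal sketch. The helper name `expandTarget` and the sample phone number are hypothetical; the document does not specify how placeholders are expanded, so the URL-encoding step is an assumption.

```javascript
// Hypothetical helper: fill {name} placeholders in a target URL template.
function expandTarget(template, values) {
  return template.replace(/\{(\w+)\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(values, name)
      ? encodeURIComponent(values[name]) // assumed: values are URL-encoded
      : match // leave unknown placeholders untouched
  );
}

const url = expandTarget('/dailer/index.html?dail={telephone}', {
  telephone: '555 0100' // made-up sample value
});
console.log(url); // → /dailer/index.html?dail=555%200100
```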

Predefined actions

(TBD)

Bug list

Dependencies

- JSON-LD support in Browser API: bug 1178491

Development Plan

- Stage 1: Single customized command.

  - Define data format and supported actions.

  - Predefined grammar structure.

  - Single command.

- Stage 2: Compound command.

  - Compound command.

- Stage 3: NLP service.

  - NLP processing of the recognition result.

Stage 1

In stage 1, we will implement experimental code in gaia under the following conditions:

- We use WebActivity to support interactions that switch the UI, and IAC (Inter-App Communication) to support background interactions.

- For multi-step interactions, we use IAC as the basis for asking apps to help Vaani fill in the missing elements and perform the command.

- We only support predefined actions since we don't have NLP.

Repos

All sources can be found at the following places:

- https://github.com/john-hu/gaia/tree/vaani-test-case (for gaia repo)

- https://github.com/john-hu/vaani/tree/custom-command (for vaani app)

Current status

Only WebActivity is supported.

Actions

The predefined actions are DialAction and OpenAppAction, which are mapped to grammars like these:

    # DialAction
    #JSGF v1.0;
    grammar fxosVoiceCommands;
    public <dial> = call (one | two | three | four | five | six | seven | eight | nine | zero)+;

    # OpenAppAction
    #JSGF v1.0;
    grammar fxosVoiceCommands;
    public <open> = open `app names...`;

DialAction can be mapped into a concrete grammar. Since an app may have a pronounceable name that differs from its displayed name (bug 1180113), the grammar of OpenAppAction is defined from the built-in apps.
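To make the DialAction mapping concrete, the sketch below builds the grammar string and turns a matching transcript back into a number. It is illustrative only and is not the code in predefined-actions/dial-action.js; the function names `dialGrammar` and `parseDial` are hypothetical.

```javascript
// Illustrative only; not the code in predefined-actions/dial-action.js.
// The index of each word is the digit it stands for.
const DIGITS = ['zero', 'one', 'two', 'three', 'four',
                'five', 'six', 'seven', 'eight', 'nine'];

// Build the JSGF grammar string for the dial command.
function dialGrammar() {
  return '#JSGF v1.0; grammar fxosVoiceCommands; ' +
         'public <dial> = call (' + DIGITS.join(' | ') + ')+;';
}

// Turn a transcript such as "call one two three" into "123".
// Returns null when the transcript does not match the dial grammar.
function parseDial(transcript) {
  const words = transcript.trim().toLowerCase().split(/\s+/);
  if (words.length < 2 || words[0] !== 'call') {
    return null;
  }
  let number = '';
  for (const word of words.slice(1)) {
    const digit = DIGITS.indexOf(word);
    if (digit === -1) {
      return null;
    }
    number += digit;
  }
  return number;
}

console.log(parseDial('call one two three')); // → 123
```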

OpenAppAction can be expressed as a generic OpenAction with a SoftwareApplication object. We therefore change the definition of OpenAppAction to an OpenAction with an object field whose value is SoftwareApplication; the resulting definition appears in the Vaani app manifest settings below.

Manifest change at dialer/Vaani app

We add the following settings to dialer app:

    "custom_commands": [{
      "@context": "http://schema.org",
      "@type": "DialAction",
      "target": {
        "@type": "WebActivity",
        "name": "dial",
        "data": {
          "type": "webtelephony/number",
          "number": "@number"
        }
      }
    }]

We add the following settings to Vaani app:

    "custom_commands": [{
      "@context": "http://schema.org",
      "@type": "OpenAction",
      "object": "SoftwareApplication",
      "target": {
        "@type": "WebActivity",
        "name": "open-app",
        "data": {
          "type": "webapp",
          "manifest": "@manifest",
          "entry_point": "@entry_point"
        }
      }
    }]

All variables of DialAction and OpenAction are also predefined. In this case, the variable @number is replaced with the recognized number, and @manifest and @entry_point are replaced with the app's manifest and entry point.

Since no app handles the open-app activity, we also add an open-app activity handler to the Vaani app.
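The variable replacement described above can be sketched as a small function over the data field of a target. This is a sketch under assumptions: the helper name `fillVariables` is hypothetical, and it handles only the flat data objects shown in the manifests above.

```javascript
// Hypothetical helper: replace "@name" string values in a target's data
// with recognized values. Other values are copied unchanged, and the
// input object is not modified.
function fillVariables(data, variables) {
  const filled = {};
  for (const [key, value] of Object.entries(data)) {
    const isVariable = typeof value === 'string' && value.startsWith('@');
    const name = isVariable ? value.slice(1) : null;
    const known = isVariable &&
      Object.prototype.hasOwnProperty.call(variables, name);
    filled[key] = known ? variables[name] : value;
  }
  return filled;
}

const dialData = { type: 'webtelephony/number', number: '@number' };
const filledDial = fillVariables(dialData, { number: '123' });
console.log(filledDial.number); // → 123
```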

Structure of Code

predefined actions (predefined-actions/*.js)

A predefined action is responsible for:

- parsing the target field of manifest.webapp

- generating the grammar

- parsing the result from the transcript

DialAction can be found at predefined-actions/dial-action.js, and OpenAppAction at predefined-actions/open-app-action.js.
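The three responsibilities listed above suggest an object with roughly the following shape. This is a hedged sketch for the open-app case only; the class name, the method names, the app-name map, and the sample manifest URL are assumptions and do not reflect the actual interface in predefined-actions/*.js.

```javascript
// Illustrative shape of a predefined action; names are assumptions.
class OpenAppActionSketch {
  // appNames: Map of spoken name -> { manifest, entryPoint } (hypothetical).
  constructor(appNames) {
    this.appNames = appNames;
    this.target = null;
  }
  // 1. Parse the target field of a custom_commands entry.
  parseTarget(command) {
    this.target = command.target || null;
    return this.target !== null && this.target['@type'] === 'WebActivity';
  }
  // 2. Generate the grammar from the known app names.
  generateGrammar() {
    const names = [...this.appNames.keys()].join(' | ');
    return '#JSGF v1.0; grammar fxosVoiceCommands; ' +
           'public <open> = open (' + names + ');';
  }
  // 3. Parse the transcript into the variables of the action.
  parseTranscript(transcript) {
    const match = /^open (.+)$/.exec(transcript.trim().toLowerCase());
    const app = match ? this.appNames.get(match[1]) : undefined;
    if (!app) {
      return null;
    }
    return { manifest: app.manifest, entry_point: app.entryPoint };
  }
}

// Usage with a single made-up app entry.
const openAction = new OpenAppActionSketch(new Map([
  ['camera', { manifest: 'app://camera.example/manifest.webapp', entryPoint: '' }]
]));
const targetOk = openAction.parseTarget({
  '@type': 'OpenAction',
  target: { '@type': 'WebActivity', name: 'open-app' }
});
const openVars = openAction.parseTranscript('open camera');
```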

launchers (launchers/*.js)

A launcher executes the command parsed by Vaani. Currently, we only support WebActivity (launchers/activity-launcher.js). In the future, we will support IAC.
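A WebActivity launcher could look roughly like the sketch below. It is not the code in launchers/activity-launcher.js. On Firefox OS the activity constructor would be MozActivity; here it is passed in as a parameter so the sketch also runs elsewhere, and `FakeActivity` is a stand-in invented for this example.

```javascript
// Sketch of a WebActivity launcher. On Firefox OS the constructor would be
// MozActivity; it is passed in so the sketch also runs with a stand-in.
function launchActivity(target, data, ActivityCtor) {
  return new Promise((resolve, reject) => {
    const activity = new ActivityCtor({ name: target.name, data: data });
    activity.onsuccess = () => resolve(activity.result);
    activity.onerror = () => reject(activity.error);
  });
}

// Stand-in for MozActivity, used only in this example.
const startedActivities = [];
class FakeActivity {
  constructor(options) {
    startedActivities.push(options);
    this.result = 'ok';
    // Report success asynchronously, as a real request would.
    setTimeout(() => { if (this.onsuccess) { this.onsuccess(); } }, 0);
  }
}

launchActivity(
  { '@type': 'WebActivity', name: 'dial' },
  { type: 'webtelephony/number', number: '123' },
  FakeActivity
).then((result) => console.log('activity finished:', result));
```

On a device, the same call would pass `MozActivity` as the third argument.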

actions parser (store/app-actions.js)

The actions parser parses action definitions from manifest.webapp. It looks up the custom_commands field in each manifest.webapp file. Once it finds one, it asks the launcher to parse it.
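The lookup step can be illustrated with a short sketch that scans a set of parsed manifests and groups their custom_commands entries by action type. The function name `collectActions`, the input shape, and the sample manifest URLs are assumptions, not the code in store/app-actions.js.

```javascript
// Hypothetical sketch: collect custom_commands entries from app manifests.
// `manifests` maps a manifest URL to its parsed manifest.webapp content.
function collectActions(manifests) {
  const byType = new Map(); // action @type -> [{ manifestURL, command }]
  for (const [manifestURL, manifest] of Object.entries(manifests)) {
    const commands = manifest.custom_commands;
    if (!Array.isArray(commands)) {
      continue; // this app declares no custom commands
    }
    for (const command of commands) {
      const type = command['@type'];
      if (!byType.has(type)) {
        byType.set(type, []);
      }
      byType.get(type).push({ manifestURL, command });
    }
  }
  return byType;
}

// Usage with two made-up manifests; only the first declares a command.
const found = collectActions({
  'app://dialer.example/manifest.webapp': {
    name: 'Dialer',
    custom_commands: [{
      '@context': 'http://schema.org',
      '@type': 'DialAction',
      target: { '@type': 'WebActivity', name: 'dial' }
    }]
  },
  'app://clock.example/manifest.webapp': { name: 'Clock' }
});
console.log([...found.keys()]); // → [ 'DialAction' ]
```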

core (action/standing-by.js)

We extend the original design of Vaani but change the setupSpeech and _interpreter functions in standing-by.js:

- setupSpeech: we use predefined actions to generate grammars.

- _interpreter: we use predefined actions to parse transcript and use launcher to execute command.

FAQ

(TBD)

- JSON-LD is written in JavaScript scope, which means we can only get the information after the app is launched.

- To achieve NLP recognition, SpeechRecognition should support grammar-free recognition.