CodeCoverage/Firefly

Edited by Mnandigama

Revision as of 00:41, 7 August 2009

Introduction

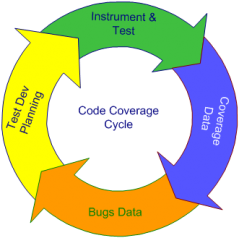

Project Firefly is an internal Mozilla project that combines code coverage data, bug data and code churn data to narrow the focus to smaller portions of the code base, so that high-impact test cases can be written for the coverage gaps found there.

The coverage cycle shown in image 1 best describes our tactical approach.

Why

Impact of code coverage as the sole instrument of decision analysis

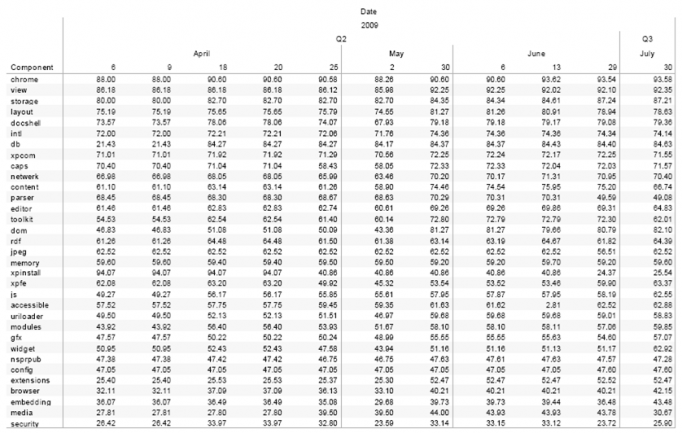

Code coverage data in isolation is an incomplete metric. A lack of coverage in a given area may indicate a potential risk, but 100% coverage does not mean that the code is safe and secure. Consider the example above: code coverage data for the Firefox executable, grouped by component and collected from automated test suite runs only. A number of manual test suites provide additional coverage that is not included here.

Given only this information, one tends to gravitate toward the components with low code coverage when developing tests to improve product quality.

However, given an additional data point such as the size of each component, you may realize that filling a 40% coverage gap in, say, Content gives you more bang for the buck than closing a 100% coverage gap in xpinstall. [Side note: this data was generated on Linux, so there is no coverage for xpinstall.]

![Component sizes: number of files in a component](Covdatafilesize.PNG)

With component size (the number of files in each component) as an additional data point, it is clear that coverage improvements in Content and Layout give more bang for the buck.
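
The size argument can be made concrete with a little arithmetic: the absolute coverage gap of a component is roughly its size multiplied by its uncovered fraction. The Python sketch below ranks components by that product. The numbers are made up for illustration (they are not the Firefly measurements), and size is approximated here by executable lines rather than the file counts used on this page.

```python
from dataclasses import dataclass


@dataclass
class ComponentCoverage:
    name: str
    executable_lines: int  # component size (hypothetical figures below)
    line_coverage: float   # fraction of executable lines hit, 0.0 to 1.0

    @property
    def uncovered_lines(self) -> int:
        # Absolute coverage gap: lines that no automated test executes.
        return round(self.executable_lines * (1.0 - self.line_coverage))


def rank_by_absolute_gap(components):
    """Order components by how many uncovered lines they contain, largest first."""
    return sorted(components, key=lambda c: c.uncovered_lines, reverse=True)


# Hypothetical figures for illustration only; NOT the Firefly measurements.
components = [
    ComponentCoverage("content", 200_000, 0.60),   # a 40% gap in a large component
    ComponentCoverage("layout", 250_000, 0.65),
    ComponentCoverage("xpinstall", 8_000, 0.0),    # a 100% gap in a small component
]

for c in rank_by_absolute_gap(components):
    print(f"{c.name:10} {c.line_coverage:4.0%} covered, {c.uncovered_lines:6d} lines uncovered")
```

A 40% gap in a large component can hide ten times as many untested lines as a 100% gap in a small one, which is the point the size data makes.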

But which of those hundreds of files need attention first?

Additional pointers for a better decision analysis

If, for each file in a given component, we have additional data points such as the number of bugs fixed, regression bugs fixed, security bugs fixed and crash bugs fixed, along with manual code coverage, branch coverage, etc., we can stack rank the files in that component by any of those measures.
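
A minimal Python sketch of such a stack ranking, assuming the per-file data points are already available as records. The `FileRecord` fields, the file names and the coverage tie-breaker are hypothetical choices for illustration, not Firefly's actual data layout.

```python
from dataclasses import dataclass


@dataclass
class FileRecord:
    """Per-file data points; this field set is an illustrative assumption."""
    path: str
    component: str
    automated_coverage: float  # % of lines covered by automated tests
    bugs_fixed: int
    regressions_fixed: int
    security_bugs_fixed: int
    crash_bugs_fixed: int


def stack_rank(records, component, key, top_n=10):
    """Return up to top_n files of `component`, highest value of `key` first.

    Ties are broken by lower automated coverage, so that among equally
    bug-prone files the least-tested one surfaces first.
    """
    in_component = [r for r in records if r.component == component]
    ranked = sorted(in_component,
                    key=lambda r: (-getattr(r, key), r.automated_coverage))
    return ranked[:top_n]


# Hypothetical records, for illustration only.
records = [
    FileRecord("content/file_a.cpp", "content", 72.0, 40, 5, 1, 3),
    FileRecord("content/file_b.cpp", "content", 35.0, 25, 9, 0, 2),
    FileRecord("content/file_c.cpp", "content", 50.0, 30, 5, 2, 0),
    FileRecord("layout/file_d.cpp", "layout", 20.0, 60, 12, 3, 7),
]

for r in stack_rank(records, "content", "regressions_fixed"):
    print(r.path, r.regressions_fixed, r.automated_coverage)
```

Passing `"crash_bugs_fixed"` or `"security_bugs_fixed"` as the key gives a different ordering of the same files; the tie-breaker is a design choice of this sketch, not a Firefly rule.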

How

What

Where

Enhancement Requests

- Code inlining may cause some code to be reported as not covered. Please generate coverage data with inlining disabled.

- We want branch coverage data.

- We need a side-by-side view of coverage data and MXR data.

- Show the list of test cases that touch each source file.

- Create a testing->codecoverage category in Bugzilla to file enhancement requests.

FAQs

Q. Why are you providing line coverage?

- Line coverage is the most basic form of coverage data; it helps us quickly identify coverage gaps and come up with suitable test scenarios.
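
As an illustration of how basic line coverage is to compute, assume a gcov-instrumented C/C++ build (an assumption; this page does not say which tool produced the data). gcov's annotated `.gcov` output prefixes each source line with an execution count: `-` for non-executable lines, `#####` for executable lines that never ran. The Python sketch below is a simplified parser of that text; the sample input is hand-written, not real Firefox output.

```python
def gcov_line_coverage(gcov_text: str) -> tuple[int, int]:
    """Return (executed_lines, executable_lines) from gcov's annotated output.

    Each annotated line looks like "count:line_number:source". A count of "-"
    marks a non-executable line, "#####" (or "=====" for exception-only code)
    an executable line that never ran, and a number an executed line.
    Line number 0 carries header records such as "Source:" and is skipped.
    """
    executed = executable = 0
    for raw in gcov_text.splitlines():
        parts = raw.split(":", 2)
        if len(parts) < 3:
            continue  # not an annotated source line (e.g. "branch"/"call" records)
        count, line_no = parts[0].strip(), parts[1].strip()
        if not line_no.isdigit() or line_no == "0" or count == "-":
            continue
        executable += 1
        if count not in ("#####", "====="):
            executed += 1
    return executed, executable


# Hand-written sample in .gcov layout, for illustration only.
sample = """\
        -:    0:Source:demo.c
        -:    1:#include <stdio.h>
        1:    2:int main(int argc, char **argv) {
        1:    3:    if (argc > 1)
    #####:    4:        puts("args given");
        1:    5:    return 0;
        -:    6:}
"""

executed, executable = gcov_line_coverage(sample)
print(f"{executed}/{executable} lines covered ({100 * executed / executable:.1f}%)")
```

The `#####` entry is exactly the kind of gap a tester can act on: it points at a concrete line and the condition that guards it.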

Q. What about function coverage? Why is function coverage less than 100% for files that have 100% line coverage? Look at the following scenario, which proves my point: there is 100% line coverage but only 49.3% function coverage.

- As you can see from the linked details, this is an artifact of multithreaded applications running runtime-generated functions.

- If more than one instance of a function is generated on the stack, and one of them acquires the lock on the thread while the other goes out of scope before it can acquire the lock, the losing instance will have zero coverage.

- Function coverage is the least reliable of all modes of code coverage. For instance, consider a function with 1000 lines of code [this is an exaggeration] and an if condition in its first 10 lines that throws execution out of the function block when the condition is not met. Any such early exit through the 'if' branch still shows 100% function coverage, whereas in reality we have barely scratched the surface of that function.
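
The early-exit scenario above is easy to reproduce. The Python sketch below uses `sys.settrace` to record which lines of a function run; `parse_header` is a made-up example, and Python tracing stands in for the C/C++ instrumentation used for Firefox. A single call with bad input counts the function as covered, while only the `if` and the early `return` actually execute.

```python
import dis
import sys


def executed_lines(func, *args):
    """Call func(*args) and return the line numbers executed inside func."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None          # do not trace other functions
        if event == "line":
            hit.add(frame.f_lineno)
        return tracer            # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return hit


def body_lines(func):
    """Executable lines of func's body, taken from its bytecode line table."""
    code = func.__code__
    return {line for _, line in dis.findlinestarts(code)
            if line is not None and line != code.co_firstlineno}


def parse_header(data):  # hypothetical function under test
    if not data.startswith(b"HDR"):
        return None              # early exit: the rest of the body never runs
    version = data[3]
    length = data[4]
    payload = data[5:5 + length]
    checksum = sum(payload) & 0xFF
    return version, payload, checksum


body = body_lines(parse_header)
hit = executed_lines(parse_header, b"XYZ") & body
print("function coverage: 1/1 functions entered (100%)")
print(f"line coverage: {len(hit)}/{len(body)} lines ({100 * len(hit) / len(body):.0f}%)")
```

Function coverage only asks "was the function entered?", so it cannot distinguish this call from one that exercises the whole body.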

Q. OK, smarty pants! What about branch coverage? Is it not the most meaningful of all modes of coverage?

- You are right that branch coverage gives a truer sense of coverage.

- Branch coverage data helps identify integration test gaps, functional test gaps, etc.

- I have prototyped a C/C++ branch coverage strategy using 'zcov'.

- I can present the findings from branch coverage to interested parties one-on-one.

- I have no plans to make 'branch coverage' results generally available this quarter.
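
To see why branch coverage is stricter than line coverage, consider a toy example: with a single negative input, every line of `clamp_to_zero` runs, yet the false outcome of its `if` is never taken. The Python sketch below approximates branch coverage by recording line-to-line transitions (arcs) with `sys.settrace`. It illustrates the concept only; it is not how zcov or gcov measure branches, and the function is hypothetical.

```python
import sys


def trace_lines_and_arcs(func, *args):
    """Call func(*args); return (lines executed, (from_line, to_line) arcs) in func."""
    code = func.__code__
    lines, arcs = set(), set()
    prev = None

    def tracer(frame, event, arg):
        nonlocal prev
        if frame.f_code is not code:
            return None
        if event == "line":
            lines.add(frame.f_lineno)
            if prev is not None:
                arcs.add((prev, frame.f_lineno))
            prev = frame.f_lineno
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return lines, arcs


def clamp_to_zero(x):  # hypothetical function under test
    result = x
    if x < 0:
        result = 0
    return result


first = clamp_to_zero.__code__.co_firstlineno
body = {first + 1, first + 2, first + 3, first + 4}
decision = first + 2                     # the "if x < 0:" line
outcomes = {(decision, first + 3),       # condition true: enter the if body
            (decision, first + 4)}       # condition false: jump to "return"


def coverage(inputs):
    """Return (line %, branch %) accumulated over all test inputs."""
    lines, arcs = set(), set()
    for x in inputs:
        run_lines, run_arcs = trace_lines_and_arcs(clamp_to_zero, x)
        lines |= run_lines
        arcs |= run_arcs
    line_pct = 100 * len(lines & body) / len(body)
    branch_pct = 100 * len(arcs & outcomes) / len(outcomes)
    return line_pct, branch_pct


print("tests [-5]:   ", coverage([-5]))
print("tests [-5, 5]:", coverage([-5, 5]))
```

Only the branch numbers reveal that a non-negative input is missing from the test set; the line numbers are already at 100% with the first test alone.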

Q. In what format are you presenting the code coverage analysis results?

- The data looks like this:

- For each file in the Firefox executable, you can see the automated-test code coverage %, the manual-test code coverage %, the number of fixed regression bugs, the number of fixed bugs [as calculated from the change log of the source control system], and the number of times the file has been modified in the Hg repository.

- The data can be grouped by component/subcomponent and ordered by top files with respect to changes, regressions, general bug fixes, etc.

- Depending on the criteria, you can select the top 10 or 20 files from any component of interest and check their code coverage details [line coverage] at the following link.

- The Mozilla community can work together to identify suitable scenarios and write test cases that fill the coverage gaps efficiently and effectively.
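
The per-file churn and bug-fix counts described above can be approximated from the change log. The Python sketch below assumes one record per changeset containing the first line of its description and the files it touched, and counts a bug as fixed in a file when the description mentions "Bug NNNNNN", following Mozilla's commit-message convention. This is a naive heuristic (backouts and passing mentions also match), regression counts would additionally need bug-tracker data, and the sample log is invented.

```python
import re
from collections import Counter, defaultdict

# Mozilla commit messages conventionally begin "Bug NNNNNN - ...".
BUG_RE = re.compile(r"\bbug\s*(\d+)", re.IGNORECASE)


def parse_log(text):
    r"""Parse '<first description line>\t<space-separated files>' records.

    Assumed layout, e.g. what a template such as
    hg log --template "{desc|firstline}\t{files}\n"
    would print. File names containing spaces would be split incorrectly.
    """
    changesets = []
    for line in text.splitlines():
        if "\t" not in line:
            continue
        desc, files = line.rsplit("\t", 1)
        changesets.append((desc, files.split()))
    return changesets


def churn_and_bug_counts(changesets):
    """Return (changes per file, distinct bug numbers referenced per file)."""
    churn = Counter()
    bug_ids = defaultdict(set)
    for desc, files in changesets:
        bugs_in_desc = set(BUG_RE.findall(desc))
        for path in files:
            churn[path] += 1
            bug_ids[path] |= bugs_in_desc
    return churn, {path: len(ids) for path, ids in bug_ids.items()}


# Hypothetical log excerpt, for illustration only.
sample_log = (
    "Bug 100 - Fix crash in frame code. r=reviewer\tlayout/file_a.cpp layout/file_b.cpp\n"
    "Bug 101 - Fix regression from bug 100. r=reviewer\tlayout/file_a.cpp\n"
    "Whitespace cleanup, no bug\tlayout/file_a.cpp content/file_c.cpp\n"
)

churn, bugs_fixed = churn_and_bug_counts(parse_log(sample_log))
for path, changes in churn.most_common():
    print(path, changes, bugs_fixed[path])
```

Counting distinct bug numbers per file keeps a follow-up commit for the same bug from inflating the count, while churn still counts every changeset that touched the file.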

Q. Which components do you recommend for the first round of study?

- Please check the following PDF files:

- Component sizes in multiple coverage runs

- Coverage numbers from multiple code cov runs

- Coverage trend graphs by component

- Based on component size, Content, Layout, Intl, xpcom, netwerk and js are the components to study.

- Our goal is to identify 10 files in each of these components to improve code coverage.

Q. You wrote in previous posts that test runs on the instrumented executable do not complete due to hangs and crashes. What exactly is crashing? I don't see any inherent reason why an instrumented build would be "more sensitive to problems", unless the instrumentation itself is buggy!

- One example: instrumentation changes the timing, which can change thread scheduling, which in turn can expose latent threading bugs. We see this relatively often with Valgrind: bugs that occur natively but not under Valgrind, or vice versa.

- You can pick any dynamic instrumentation tool you like; you can find more bugs with it than with an optimized build.

Q. OK! Show me how you arrived at these conclusions. How can I do it myself?

- I would be glad to explain that. Buy me a beer :)