Auto-tools/Projects/Alerts

Revision as of 18:54, 3 July 2014

Datazilla Alerting System

The Datazilla Alerting System, also known as dzAlerts, is a daemon that periodically inspects the Talos, B2G, and Eideticker performance results and generates alerts when there is a statistically significant performance regression.

Objective

dzAlerts' objective is to provide high-quality, detailed alerts on performance regressions.

Inputs: taken/come from TBD.

Outputs: TBD (emails, other system for manual examination/classification, ...)

History

dzAlerts started as code inside Datazilla, hence the name. It was split out during development for several reasons:

- The alerting code was distinct from the Datazilla code: it was designed as standalone daemons, with little integration with the main UI-centric code.

- Integrating the alerts data access into the existing Datazilla database access pattern required too much boilerplate code, which needed additional maintenance as the project evolved.

- The Datazilla database schema was not designed for pulling long time series on individual tests in a performant way.

- The two projects had different release schedules.

dzAlerts is now completely separate, and uses an ElasticSearch cluster to pull slices out of the data cube of test results. It maintains enough information about the alerts so it can provide links to the Datazilla UI.

Nomenclature

The nomenclature used by dzAlerts differs slightly from that of the surrounding applications it deals with. This keeps its terms consistent with their general meaning, and provides names for fine-grained features that are visible to dzAlerts but invisible to other systems.

- Test Suite - or "suite" for short, is a set of bundled tests. All the "tests" referred to by Datazilla and Talos are actually bundles of individual tests. Examples of test suites are dromaeo_css, kraken, tp5o, and tscrollx.

- Test - a single test responsible for testing a feature. Dromaeo_css has 6 tests called dojo.html, ext.html, jquery.html, mootools.html, prototype.html and yui.html. These can also be called "pages", or "page tests", depending on the suite.

- Replicate - Each test is executed (replicated) multiple times, and the result stored in an array. Each result is called a "replicate", and the array of results is usually referred to as "the replicates".

- Sub-Test - A test should be an atom, but unfortunately some tests bundle yet-deeper, unnamed tests into their replicates. These buried tests are called "sub-tests". To handle them, the ETL step further splits the replicates into normal tests for dzAlerts to digest (more below).

- Time Series - A statistic from a family of test results which can reasonably be compared to each other, and sorted by time (usually by push date).

- Score - The t-test and median test functions output a p-value. This value is meaningful when it is close to zero, and should be charted on a vertical log-scale. For the sake of charting here we define SCORE = -log10(p-VALUE), which charts nicely alongside the time series, and provides a hint to the number of zeros to the right of the decimal place.
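To make the score concrete, here is a minimal Python sketch of the conversion and its inverse. The function names `score` and `score_to_p` are illustrative, not taken from the dzAlerts code base.

```python
import math


def score(p_value: float) -> float:
    """Convert a p-value to a score: SCORE = -log10(p-VALUE).

    Small p-values become large scores: 0.01 -> 2, 0.0001 -> 4.
    """
    if not 0.0 < p_value <= 1.0:
        raise ValueError("p-value must be in the interval (0, 1]")
    return -math.log10(p_value)


def score_to_p(score_value: float) -> float:
    """Inverse mapping, useful for turning a score threshold into a p-value."""
    return 10.0 ** -score_value


# Thresholds used in the Eideticker example in the Analysis section.
MEDIAN_THRESHOLD = 2.0   # p < 0.01
T_TEST_THRESHOLD = 4.0   # p < 0.0001
```

Working in scores rather than p-values keeps the thresholds readable: "score > 4" says the same as "p < 0.0001".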

General Design

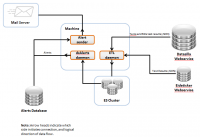

There are two main parts to dzAlerts. The ETL step copies data from Datazilla to the ElasticSearch cluster, and the dzAlerts daemon identifies performance regressions and records what it finds in a database.

ETL

The Extract Transform Load (ETL) daemon is responsible for

- Extracting the JSON test suite results from Datazilla,

- Transforming the bundle of test results into individual records, and

- Loading them into the ElasticSearch cluster.

The transform step is the most interesting, but still simple: In the case of tp5o, each of the 50 page test results is given a copy of all the tp5o metadata (like platform, branch, test_run_time, etc). This is highly redundant, but allows ElasticSearch to index each test simply for fast retrieval.
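The transform step can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical input shape in which each suite result is a dict whose "results" key maps test names to replicate lists and every other key is suite metadata; the real Datazilla JSON differs, and the sample values below are made up.

```python
from typing import Any


def flatten_suite(suite_result: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn one suite result into one record per test.

    Assumes (hypothetically) that per-test replicates live under "results"
    and every other top-level key is suite metadata.
    """
    metadata = {k: v for k, v in suite_result.items() if k != "results"}
    records = []
    for test_name, replicates in suite_result["results"].items():
        record = dict(metadata)          # redundant copy of the suite metadata
        record["test"] = test_name
        record["replicates"] = list(replicates)
        records.append(record)
    return records


# Made-up example input.
suite = {
    "suite": "tp5o",
    "platform": "linux64",
    "branch": "mozilla-inbound",
    "test_run_time": 1404413640,
    "results": {
        "page_a.html": [120.0, 118.0, 121.0],
        "page_b.html": [95.0, 97.0, 96.0],
    },
}
for row in flatten_suite(suite):
    print(row["test"], row["platform"], row["replicates"])
```

Each output record is self-describing, which is what lets the search index answer a filter on platform, branch and test name without any joins.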

Dromaeo* ETL

The two dromaeo* suites (dromaeo_css, dromaeo_dom) are a little more complicated, and are best demonstrated with a couple of charts from Datazilla. The top chart shows the mean and variance of the modify.html test. One of the test results is highlighted, and its individual replicates are shown in the bottom chart. Looking at the replicates, we see they come in sets of five: the five replicates in each set have approximately the same value, and each set is distinct from the others. This pattern explains why the variance shown in the top chart is consistently high.

This same pattern happens in all tests of both dromaeo_css and dromaeo_dom.
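One plausible way to turn those sets of five into ordinary tests is sketched below. It assumes, as the charts suggest, that each consecutive run of five replicates belongs to one hidden sub-test; the function name, the `group_size` parameter and the `<test>.<n>` naming of the resulting sub-tests are hypothetical, and the replicate values are made up.

```python
def split_subtests(record: dict, group_size: int = 5) -> list[dict]:
    """Split a dromaeo* test record into one record per set of replicates.

    Assumes every consecutive run of `group_size` replicates belongs to one
    hidden sub-test; the "<test>.<n>" naming is hypothetical.
    """
    replicates = record["replicates"]
    if len(replicates) % group_size != 0:
        raise ValueError("replicate count is not a multiple of the group size")
    subtests = []
    for n, start in enumerate(range(0, len(replicates), group_size)):
        sub = dict(record)                     # keep the suite/test metadata
        sub["test"] = f"{record['test']}.{n}"
        sub["replicates"] = replicates[start:start + group_size]
        subtests.append(sub)
    return subtests


# Made-up replicates: two sets of five with clearly different levels.
record = {"suite": "dromaeo_dom", "test": "modify.html",
          "replicates": [410, 412, 409, 411, 410, 250, 252, 249, 251, 250]}
for sub in split_subtests(record):
    print(sub["test"], sub["replicates"])
```

After the split, each sub-test has a tight spread of replicates, so its variance no longer mixes the differences between sub-tests.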

Pulling Data

Requesting a time series is done by filtering the test results by product, branch, platform and test name. ElasticSearch can pull months of Talos test results for any one combination in under a second. Each test result is made of many replicates, and those replicates are reduced to a single statistic and forwarded for analysis. The statistic chosen is usually the median; it is more stable in the face of outliers in the replicates, and gives us some protection from bimodal data.
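The filter-then-reduce step is sketched below in plain Python, working on an in-memory list rather than on ElasticSearch, so no ElasticSearch API is implied. Field names such as `push_date` and `replicates` are assumptions, and the records are made-up examples; the first record shows how a single outlying replicate barely moves the median.

```python
import statistics


def time_series(records, product, branch, platform, test):
    """Filter test records to one comparable family and reduce each to its median.

    In-memory stand-in for the ElasticSearch filter; field names are assumed.
    """
    wanted = {"product": product, "branch": branch, "platform": platform, "test": test}
    matching = [r for r in records if all(r.get(k) == v for k, v in wanted.items())]
    matching.sort(key=lambda r: r["push_date"])     # time order, by push date
    # The median ignores a single wild replicate far better than the mean does.
    return [(r["push_date"], statistics.median(r["replicates"])) for r in matching]


records = [
    {"product": "Firefox", "branch": "mozilla-inbound", "platform": "win7",
     "test": "page_a.html", "push_date": 2, "replicates": [100, 101, 99, 500]},
    {"product": "Firefox", "branch": "mozilla-inbound", "platform": "win7",
     "test": "page_a.html", "push_date": 1, "replicates": [100, 102, 101]},
    {"product": "Firefox", "branch": "mozilla-inbound", "platform": "linux64",
     "test": "page_a.html", "push_date": 1, "replicates": [80, 81, 79]},
]
print(time_series(records, "Firefox", "mozilla-inbound", "win7", "page_a.html"))
```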

Analysis

Once the test results are retrieved and sorted by push date, several window functions are run over the data: they calculate the past stats, future stats, t-test p-value, and median test p-value, among other things.
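A minimal sketch of the past/future window statistics follows, assuming the future window starts at the candidate revision itself and the past window ends just before it (a convention chosen for illustration). The default window of 20 mirrors the Talos default mentioned under Alert Management; `window_stats` is a hypothetical name.

```python
import statistics


def window_stats(values, window=20):
    """Past/future summary statistics for every position in a time series.

    For index i, the past window is values[i-window:i] and the future window is
    values[i:i+window], so the candidate revision opens the future window.
    Positions with fewer than two points on either side get None, since a
    variance cannot be estimated from a single sample.
    """
    rows = []
    for i in range(len(values)):
        past = values[max(0, i - window):i]
        future = values[i:i + window]
        if len(past) < 2 or len(future) < 2:
            rows.append(None)
            continue
        rows.append({
            "past_mean": statistics.fmean(past),
            "past_stdev": statistics.stdev(past),
            "future_mean": statistics.fmean(future),
            "future_stdev": statistics.stdev(future),
        })
    return rows


series = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
for i, row in enumerate(window_stats(series, window=3)):
    print(i, row)
```

Near both ends the windows are truncated, which is one source of the edge effects discussed under Future Plans.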

The median test decides *whether* there is a regression. The median test is insensitive to amplitude, which allows it to ignore the intermittent non-Gaussian noise we see in many of the time series. It is only good at detecting clear discontinuities in the time series, which is fine because that is what we are looking for. However, it fails at isolating which revision is closest to the discontinuity, and picks any revision on an increasing (or decreasing) slope in the vicinity. Slowly changing statistics are completely invisible to it.
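For reference, this is a sketch of a two-sample median test, assuming the classic Mood's median test, in pure standard-library Python, comparing a past window with a future window. Values equal to the pooled median are counted as "below", and no continuity correction is applied, so p-values will differ slightly from `scipy.stats.median_test` with its defaults. It illustrates the technique and is not the dzAlerts implementation.

```python
import math
import statistics


def median_test_pvalue(before, after):
    """Two-sample median test (Mood's) p-value for a past and a future window.

    Builds the 2x2 table of counts above / at-or-below the pooled median and
    applies a chi-square test with one degree of freedom (no continuity
    correction). Both windows must be non-empty.
    """
    grand_median = statistics.median(list(before) + list(after))
    table = [
        [sum(x > grand_median for x in sample), sum(x <= grand_median for x in sample)]
        for sample in (before, after)
    ]
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    if 0 in col_totals:
        # No value lies strictly above the pooled median (heavy ties),
        # so the table carries no evidence of a shift.
        return 1.0
    chi2 = sum(
        (table[i][j] - row_totals[i] * col_totals[j] / n) ** 2
        / (row_totals[i] * col_totals[j] / n)
        for i in range(2)
        for j in range(2)
    )
    # Survival function of chi-square with 1 dof: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2.0))


past = [10.0] * 10
print(median_test_pvalue(past, [20.0] * 10))
print(median_test_pvalue(past, [2000.0] * 10))   # same p-value: amplitude is ignored
```

Because only the counts above and below the pooled median enter the table, a jump to 2000 scores exactly the same as a jump to 20. That is the amplitude insensitivity described above, and also why this test cannot say which revision in a slope is to blame.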

If the median test signals there may be a regression, the t-test is used to determine the specific revision to blame. The t-test's amplitude sensitivity allows us to detect the specific revision closest to the discontinuity. The process is best explained in a chart.
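A sketch of the t-test side follows, assuming Welch's unequal-variance form. To stay within the standard library it converts the t statistic to a two-sided p-value with a normal approximation, which is only reasonable for fairly large windows and understates the p-value for small ones; `scipy.stats.ttest_ind(before, after, equal_var=False)` gives the exact Welch p-value. The function names are hypothetical.

```python
import math
import statistics


def welch_t(before, after):
    """Welch's t statistic for a shift in mean from `before` to `after`."""
    m1, m2 = statistics.fmean(before), statistics.fmean(after)
    se = math.sqrt(statistics.variance(before) / len(before)
                   + statistics.variance(after) / len(after))
    if se == 0.0:
        return 0.0 if m1 == m2 else math.copysign(math.inf, m2 - m1)
    return (m2 - m1) / se


def t_test_pvalue_approx(before, after):
    """Two-sided p-value using a normal approximation to the t distribution.

    Approximation only; use scipy.stats.ttest_ind(..., equal_var=False)
    for the exact Welch p-value.
    """
    return math.erfc(abs(welch_t(before, after)) / math.sqrt(2.0))


before = [10, 11, 9, 10, 11, 9, 10, 10]
after = [15, 16, 14, 15, 16, 14, 15, 15]
print(welch_t(before, after), t_test_pvalue_approx(before, after))
```

Unlike the median test, the t statistic grows with the size of the jump relative to the noise, so it peaks at the revision where the two windows differ most.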

The blue circles are the Eideticker time series, the red points are the t-test score, and the green line is the median test score. The Eideticker data is a good example of some of the smallest regressions that can be detected. In this example, the median score threshold is set to 2 (p-value < 0.01), and the t-test score threshold is set to 4 (p-value < 0.0001). First, the median test detects three discontinuities (m-score > 2): two regressions and one improvement. Second, all the high t-test scores (red) surrounding each detected regression are considered candidates, and the highest of those is taken as the beginning of the discontinuity.
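The two-stage selection can be sketched as below, operating on precomputed per-revision m-scores and t-scores (each being -log10 of the corresponding p-value). Collapsing each run of consecutive above-threshold m-scores into one detected discontinuity, and the hypothetical `margin` parameter deciding how far "surrounding" extends, are illustrative choices; the scores in the example are made up.

```python
def find_regressions(m_scores, t_scores, m_threshold=2.0, t_threshold=4.0, margin=3):
    """Return the indices blamed for discontinuities.

    m_scores, t_scores: per-revision scores (-log10 of the p-values), same length.
    margin: hypothetical number of extra revisions searched on each side of a
    detected run.
    """
    blamed = []
    n = len(m_scores)
    i = 0
    while i < n:
        if m_scores[i] <= m_threshold:
            i += 1
            continue
        # Stage 1: a run of consecutive high m-scores is one detected discontinuity.
        start = i
        while i < n and m_scores[i] > m_threshold:
            i += 1
        lo, hi = max(0, start - margin), min(n, i + margin)
        # Stage 2: among nearby revisions with a high t-score, blame the highest.
        candidates = [j for j in range(lo, hi) if t_scores[j] > t_threshold]
        if candidates:
            best = max(candidates, key=lambda j: t_scores[j])
            if best not in blamed:
                blamed.append(best)
    return blamed


m = [0.1, 0.5, 2.5, 3.1, 2.8, 0.4, 0.2]
t = [0.0, 1.2, 5.0, 9.3, 6.1, 0.8, 0.1]
print(find_regressions(m, t))   # [3]
```

The median test only says "something happened around here"; the t-score, being amplitude sensitive, then picks the single revision where the jump is sharpest.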

Future Plans

The use of window functions is flawed:

- There are edge effects, especially noticeable when the far window edge moves over a discontinuity.

- Small window sizes sometimes do not capture enough samples to characterize the variance.

- Large window sizes are less sensitive and make short-term regressions invisible.

Significant improvements can be made to the analysis by removing the need to declare a window size:

- Piece-wise linear regression analysis (http://en.wikipedia.org/wiki/Segmented_regression)

- Using a mixture model (http://en.wikipedia.org/wiki/Mixture_model) would help characterize multi-modal data and construct a more useful aggregate statistic.
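As an illustration of the segmented-regression idea, the sketch below fits two independent least-squares lines to a series and searches for the single breakpoint that minimizes the total squared error. It needs only a minimum segment length instead of a window size; a usable version would also need multiple breakpoints and a significance test, neither of which is shown. The function names are hypothetical.

```python
def _line_sse(xs, ys):
    """Sum of squared residuals of the least-squares line through (xs, ys)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    intercept = my - slope * mx
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def best_breakpoint(ys, min_segment=3):
    """Index k splitting ys into ys[:k] and ys[k:] with minimal total SSE."""
    if len(ys) < 2 * min_segment:
        raise ValueError("series too short for two segments")
    xs = list(range(len(ys)))
    best_k, best_sse = None, float("inf")
    for k in range(min_segment, len(ys) - min_segment + 1):
        # The two segments are fitted independently, so they need not join at k.
        sse = _line_sse(xs[:k], ys[:k]) + _line_sse(xs[k:], ys[k:])
        if sse < best_sse:
            best_k, best_sse = k, sse
    return best_k, best_sse


series = [10.0] * 8 + [14.0] * 8
print(best_breakpoint(series))   # (8, 0.0)
```

Because each segment can have its own slope, a slow drift is absorbed by the line fit instead of being mistaken for a step, which is the case the window-based median test cannot see at all.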

Alert Management

The Analysis phase is executed over the data multiple times as more test results arrive. This allows the algorithm to fill the future window with test statistics as they become available. Recalculating with more samples changes the confidence of the alert, and can result in the alert being obsoleted, or even reinstated. For this reason, among others, each alert is recorded in a database along with its latest statistics and status ('new' or 'obsolete'). The number of recalculations is limited by the future window size (default 20 on Talos).
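The bookkeeping can be sketched with an in-memory dict standing in for the alert database. The `Alert` fields, the `update_alerts` function and the rule that an alert is frozen once more results than the future window size have arrived after it are assumptions made for illustration; the revision id and scores are made up.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    revision: str
    m_score: float
    t_score: float
    status: str = "new"          # 'new' or 'obsolete'


def update_alerts(db, detected, future_counts, future_window=20):
    """Apply one analysis pass to the alert store.

    db:            dict revision -> Alert (stand-in for the alert database)
    detected:      dict revision -> (m_score, t_score) found by this pass
    future_counts: dict revision -> number of results seen after that revision
    """
    for rev in set(db) | set(detected):
        if rev in db and future_counts.get(rev, 0) > future_window:
            continue                      # future window was already full: frozen
        if rev in detected:
            m_score, t_score = detected[rev]
            if rev in db:                 # refresh, and re-instate if obsolete
                alert = db[rev]
                alert.m_score, alert.t_score, alert.status = m_score, t_score, "new"
            else:
                db[rev] = Alert(rev, m_score, t_score)
        else:
            db[rev].status = "obsolete"   # no longer significant with more data
    return db


db = {}
update_alerts(db, {"a1b2c3": (3.0, 5.2)}, {"a1b2c3": 5})
update_alerts(db, {}, {"a1b2c3": 6})
print(db["a1b2c3"].status)   # obsolete
update_alerts(db, {"a1b2c3": (2.6, 4.4)}, {"a1b2c3": 7})
print(db["a1b2c3"].status)   # new
```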

Collaboration

Features and development are ongoing.

- Signal from Noise meeting (notes on etherpad)

- IRC: #ateam@irc.mozilla.org

- email: klahnakoski@mozilla.com

- Dependencies for dzAlerts: meta bug 962378 on Bugzilla.