TestEngineering/Performance/FAQ

Sheriffing

Tree

Branch names and confusion

We have a variety of branches at Mozilla. Here are the main ones that we see alerts on:

- Mozilla-Inbound (PGO, Non-PGO)

- Autoland (PGO, Non-PGO)

- Mozilla-Beta (all PGO)

Linux and Windows builds have PGO; OSX does not.

When investigating alerts, always look at the Non-PGO branch first. Expect to find roughly half of the changes on Mozilla-Inbound and half on Autoland.

Be aware of the volume on each branch. Mozilla-Inbound and Autoland have higher volume, which means that alerts are generated faster and it is easier to track down the offending revision.

A final note, Mozilla-Beta is a branch where little development takes place. The volume is really low and alerts come 5 days (or more) later. It is important to address Mozilla-Beta alerts ASAP because that is what we are shipping to customers.

What is coalescing

Coalescing is a term we use for how we schedule jobs to run on a given machine. When the load is high, these jobs are placed in a queue, and the longer the queue gets, the more jobs we skip over. This allows us to get results on more recent changesets faster.

This affects Talos numbers because a regression can show up across more than one pushed changeset. We have to manually fill in the coalesced jobs (sometimes including builds) to ensure we blame the right changeset for the regression.

Some things to be aware of:

- missing test jobs - This could be as easy as waiting for jobs to finish, or scheduling the missing job if it was coalesced. Otherwise, it could be a missing build.

- missing builds - we would have to generate builds, which automatically schedules test jobs. Sometimes these test jobs are coalesced and not run.

- results might not be available due to build failures or test failures

- PGO builds are not coalesced, they just run much less frequently. Most likely a PGO build isn't the root cause

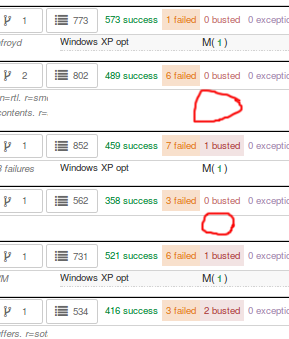

Here is a view on Treeherder of missing data (usually coalescing):

Note the two pushes that have no data (circled in red). If the regression happened around here, we might want to backfill those two jobs so we can ensure we are looking at the single push which caused the regression rather than a range of several pushes.
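As a rough illustration of picking backfill candidates, the sketch below lists the pushes with no data between the last push that looked good and the first push that looked bad. The function name, the list-of-results layout, and the sample numbers are all hypothetical; this is not how Treeherder stores or exposes data.

```python
from typing import List, Optional, Sequence


def pushes_to_backfill(
    results: Sequence[Optional[float]], last_good: int, first_bad: int
) -> List[int]:
    """Return indices of pushes strictly between last_good and first_bad
    that have no result (None), i.e. candidates for backfilling."""
    return [i for i in range(last_good + 1, first_bad) if results[i] is None]


# Hypothetical per-push results, oldest first; None marks a push with no data.
results = [100.0, 101.0, None, None, 110.0, 111.0]
print(pushes_to_backfill(results, last_good=1, first_bad=4))  # [2, 3]
```

If the list comes back empty, every push in the range already has data and the first bad push is the one to blame.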

What is an uplift

Every 6 weeks we release a new version of Firefox. When we do that, the code that developers check into the nightly branch gets uplifted (think of this as a large merge) to the Beta branch. Now all the code, features, and Talos regressions are on Beta.

This affects the Performance Sheriffs because we will get a big pile of alerts for Mozilla-Beta. These need to be addressed rapidly. Luckily almost all the regressions seen on Mozilla-Beta will already have been tracked on Mozilla-Inbound or Autoland.

What is a merge

Many times each day we merge code from the integration branches into the main branch and back. This is a common process in large projects. At Mozilla, this means that the majority of the code for Firefox is checked into Mozilla-Inbound and Autoland, then it is merged into Mozilla-Central (also referred to as Firefox) and then once merged, it gets merged back into the other branches. If you want to read more about this merge procedure, here are the details.

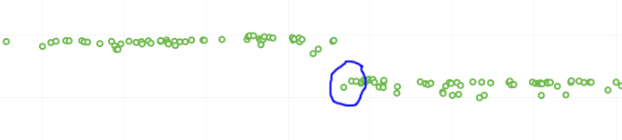

Here is an example of what a merge looks like on Treeherder:

Note that the topmost revision has the commit message "merge m-c to m-i". This is pretty standard, and you can see that there is a series of changesets, not just a few related patches.

How this affects alerts is that when a regression lands on Mozilla-Inbound, it will be merged into Firefox, then Autoland. Most likely this means that you will see duplicate alerts on the other integration branch.

- note: we do not generate alerts for the Firefox (Mozilla-Central) branch.

What is a backout

Many times we back out or hotfix code because it is causing a build failure or unittest failure. The Sheriff team handles this process in general, and backouts/hotfixes are usually done within 3 hours of the original landing (i.e. we won't have 12 future changesets). As you can imagine, we could get an alert 6 hours later, look at the graph, and see that there is no regression, only a temporary spike for a few data points.

When looking on Treeherder for a backout, note that backouts all mention a backout in the commit message:

- note: the image above mentions the bug that was backed out; sometimes it is the revision instead

Backouts which affect Perfherder alerts always generate a set of improvements and regressions. These are usually easy to spot on the graph server and we just need to annotate the set of alerts for the given revision to be a 'backout' with the bug to track what took place.
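One way to sanity-check that a spike is a backout rather than a lasting regression is to compare the data points before the spike with the ones after it. The sketch below is only an illustration: the function name, the sample values, and the 2% tolerance are assumptions, not part of Perfherder.

```python
from statistics import mean
from typing import Sequence


def returns_to_baseline(
    before: Sequence[float], after: Sequence[float], tolerance_pct: float = 2.0
) -> bool:
    """True if the mean after the spike is within tolerance_pct percent
    of the mean before the spike."""
    base = mean(before)
    return abs(mean(after) - base) / base * 100.0 <= tolerance_pct


# Hypothetical data points around a temporary spike.
before_spike = [100.0, 101.0, 99.0]
after_spike = [100.0, 100.0, 101.0]
print(returns_to_baseline(before_spike, after_spike))  # True
```

A result of False suggests the change stuck, so the alerts should be treated as a real regression (or improvement) rather than annotated as a backout.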

Here is a view on graph server of what appears to be a backout (it could be a fix that landed quickly also):

What is PGO

PGO is Profile Guided Optimization where we do a build, run it to collect metrics and optimize based on the output of the metrics. We only release PGO builds, and for the integration branches we do these periodically (6 hours) or as needed. For Mozilla-Central we follow the same pattern. As the builds take considerably longer (2+ times as long) we don't do this for every commit into our integration branches.

How does this affect alerts? We care most about PGO alerts: that is what we ship! Most of the time an alert will be generated for a -Non-PGO build and then a few hours or a day later we will see alerts for the PGO build.

Pay close attention to the branch the alerts are on. Most likely you will see an alert on the Non-PGO branch first (e.g. Mozilla-Inbound-Non-PGO), then roughly a day later you will see a similar alert show up on the PGO branch (e.g. Mozilla-Inbound).

Caveats:

- OSX does not do PGO builds, so we do not have -Non-PGO branches for that platform (e.g. we only have Mozilla-Inbound).

- PGO alerts will probably have different regression percentages, but the overall list of platforms/tests for a given revision will be almost identical
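To judge whether a PGO alert is the follow-up to an earlier Non-PGO alert, one option is to compare the sets of platform/test pairs rather than the percentages. The sketch below is a minimal illustration; the function name and the platform and test names are hypothetical.

```python
from typing import Set, Tuple

PlatformTest = Tuple[str, str]


def alert_overlap(non_pgo: Set[PlatformTest], pgo: Set[PlatformTest]) -> float:
    """Fraction of platform/test pairs shared by the two alerts
    (size of the intersection divided by size of the union)."""
    union = non_pgo | pgo
    if not union:
        return 0.0
    return len(non_pgo & pgo) / len(union)


# Hypothetical platform/test pairs from two alerts on the same revision.
non_pgo_alert = {("linux64", "test_a"), ("windows7", "test_a"), ("linux64", "test_b")}
pgo_alert = {("linux64", "test_a"), ("windows7", "test_a")}
print(alert_overlap(non_pgo_alert, pgo_alert))
```

A value close to 1.0 means the two alerts cover nearly the same platforms and tests, which is what the caveat above leads us to expect for a matching pair.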

Alert

What alerts are displayed in Alert Manager

Perfherder Alerts defaults to multiple types of alerts that are untriaged. It is a goal to keep these lists empty! You can view alerts that are improvements or in any other state (i.e. investigating, fixed, etc.) by using the drop down at the top of the page.

Do we care about all alerts/tests

Yes we do. Some tests are more commonly invalid, mostly due to noise in the tests. We also adjust the threshold per test: the default is 2%, but for Dromaeo it is 5%. If a test is too noisy, we consider removing it entirely.

Here are some platforms/tests which are exceptions to what we run:

- Linux 64bit - the only platform on which we run dromaeo_dom

- Linux 32/64bit - the only platforms on which no platform_microbench test runs, due to high noise levels
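The per-test threshold can be pictured as a lookup with a default. The sketch below only illustrates the 2% and 5% figures from the paragraph above. The names are hypothetical, applying 5% to both dromaeo_css and dromaeo_dom is an assumption, and it ignores any other filtering Perfherder applies before raising an alert.

```python
DEFAULT_THRESHOLD_PCT = 2.0

# Per-test overrides. Only the 5% figure for Dromaeo comes from the text;
# mapping it onto these two test names is an assumption.
THRESHOLD_OVERRIDES_PCT = {"dromaeo_css": 5.0, "dromaeo_dom": 5.0}


def percent_change(old: float, new: float) -> float:
    """Absolute change from old to new, as a percentage of old."""
    return abs(new - old) / old * 100.0


def exceeds_threshold(test: str, old: float, new: float) -> bool:
    """True if the change is at least as large as the test's threshold."""
    threshold = THRESHOLD_OVERRIDES_PCT.get(test, DEFAULT_THRESHOLD_PCT)
    return percent_change(old, new) >= threshold


# A 3% change passes the default threshold but not the Dromaeo one.
print(exceeds_threshold("example_test", 100.0, 103.0))  # True
print(exceeds_threshold("dromaeo_dom", 100.0, 103.0))   # False
```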

- Windows 7 - the only platform that supports xperf (toolchain is only installed there)

- Windows 7/10 - heavy profiles don't run here, because they take too long while cloning the big profiles; these are tp6 tests that use heavy user profiles

Lastly, we should prioritize alerts on the Mozilla-Beta branch since those are affecting more people.

What does a regression look like on the graph

On almost all of our tests, we are measuring based on time. This means that the lower the score the better. Whenever the graph increases in value that is a regression.

Here is a view of a regression:

We have some tests which measure internal metrics. A few of those report a score where higher is better. This is confusing, but we refer to these as reverse tests. The reverse tests are:

- canvasmark

- dromaeo_css

- dromaeo_dom

- rasterflood_gradient

- speedometer

- tcanvasmark

- v8 version 7
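The direction rule can be written down as a small check. This is a sketch under stated assumptions: the set below copies the list above verbatim (the exact identifiers used in Perfherder may differ), and the function name and sample test name are hypothetical.

```python
# Reverse tests, copied from the list above; a higher score is better for these.
REVERSE_TESTS = {
    "canvasmark",
    "dromaeo_css",
    "dromaeo_dom",
    "rasterflood_gradient",
    "speedometer",
    "tcanvasmark",
    "v8 version 7",
}


def is_regression(test: str, old: float, new: float) -> bool:
    """True if moving from old to new is a regression for this test."""
    if new == old:
        return False
    if test in REVERSE_TESTS:
        # Higher is better, so a drop is a regression.
        return new < old
    # Time-based test: lower is better, so an increase is a regression.
    return new > old


print(is_regression("example_timing_test", 100.0, 110.0))  # True
print(is_regression("speedometer", 100.0, 110.0))          # False
```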

Here is a view of a reverse regression:

Why does Alert Manager print -xx%

The alert will either be a regression or an improvement. The alerts we show by default are regressions only. It is important to know the severity of an alert. For example, a 3% regression is important to understand, but a 30% regression probably needs to be fixed ASAP. This is annotated as XX% in the UI. There is no + or - to indicate improvement or regression; this is an absolute number. Use the bar graph to the side to determine which type of alert this is.

NOTE: for the reverse tests we take that into account, so the bar graph will know to look in the correct direction.

Noise

See TestEngineering/Performance/Sheriffing/Noise_FAQ