TestEngineering/Performance/FAQ

Sheriffing

Perfherder

What is Perfherder

Perfherder is a tool that takes data points from log files and graphs them over time. It is primarily used for performance data from Talos, but also for data from AWSY, build_metrics, Autophone and platform_microbenchmarks. All of these are test harnesses, and you can find more about them here.

The code for Perfherder can be found inside Treeherder here.

Viewing details on a graph

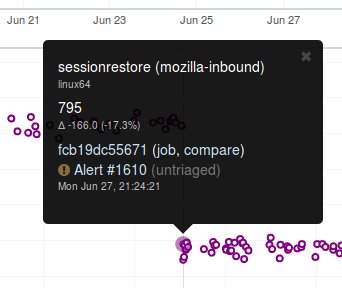

When viewing Perfherder Graph details, in many cases it is obvious where the regression is. If you mouse over the data points (without clicking on them), you can see some of the raw data values.

While looking for the specific changeset that caused the regression, you have to determine where the values changed. By moving the mouse over the data points, you can quickly find the historical high and low values, which define the normal 'range'. When the values change, the high/low values should clearly fall into a different 'range'.

If this is hard to see, it helps to zoom in to narrow the 'y' axis. Zooming into the 'x' axis to a smaller range of revisions also helps: it yields fewer data points, but makes the regression easier to see.
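The 'range' comparison described above can be sketched in Python. This is a hypothetical helper (`first_range_shift` is not part of Perfherder) that mimics eyeballing the graph. It walks the series and reports the first point where every following value sits outside the high/low range of the preceding values. The default window of 12 points is an arbitrary assumption.

```python
from typing import List, Optional, Tuple


def normal_range(values: List[float]) -> Tuple[float, float]:
    """Return the historical (low, high) range of a window of results."""
    return min(values), max(values)


def first_range_shift(values: List[float], window: int = 12) -> Optional[int]:
    """Return the index of the first point whose following `window` values all
    fall outside the (low, high) range of the preceding `window` values.

    Hypothetical heuristic for illustration; not Perfherder's detection code.
    """
    for i in range(window, len(values) - window + 1):
        low, high = normal_range(values[i - window:i])
        after = values[i:i + window]
        if all(v > high for v in after) or all(v < low for v in after):
            return i
    return None


history = [100.0, 101.0, 99.0, 100.0, 102.0, 98.0, 100.0, 101.0, 99.0, 100.0, 101.0, 100.0,
           110.0, 111.0, 109.0, 112.0, 110.0, 111.0, 110.0, 109.0, 111.0, 112.0, 110.0, 111.0]
print(first_range_shift(history))  # 12, the first value in the new range
```

Real series are noisier than this. Treat the result as a hint about where to zoom in, not as proof of the regressing push.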

Once you find the regression point, you can click on the data point to lock its information in a popup. Then you can click on the revision to investigate the raw changes that were part of that push.

Note that the popup gives you the date, revision, and value. These are all useful to be aware of while viewing graphs.

Keep in mind that graph server doesn't show whether data is missing or whether a data point covers a range of changesets.

Zooming

Perfherder graphs can adjust the date range from a drop-down box. The default is 14 days, but you can change it to the last 1, 2, 7, 14, 30, 90, or 365 days from the drop-down in the UI.

It is usually a good idea to zoom out to a 30 day view on integration branches. This allows us to see recent history as well as what the longer term trend is.

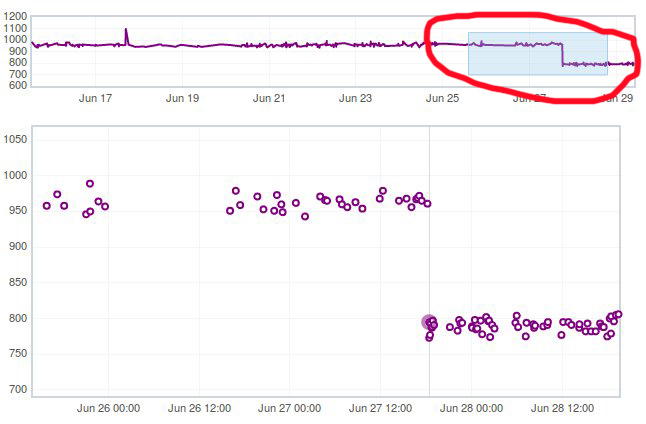

There are two parts to the Perfherder graph: the top box with the trendline and the bottom viewing area with the raw data points. If you select an area in the trendline box, the graph zooms to that area. This is useful for adjusting the Y-axis.

Here is an example of zooming in on an area:

Adding additional data points

One feature of Perfherder graphs is the ability to add up to 7 sets of data points at once and compare them on the same graph. In fact, when you click on a graph from an alert, this happens automatically and multiple branches are added at once.

While looking at a graph, it is a good idea to look at that test/platform across multiple branches. This shows where the regression originally started and whether it affects other branches. There are 3 primary reasons for adding data:

- investigating branches

- investigating platforms

- comparing PGO/non-PGO/e10s for the same test

For investigating branches, click the branch name in the UI. This pops up the "Add more test data" dialog, pre-populated with the other branches that have data for this exact platform/test. All you have to do is hit add.

For investigating platforms, click the platform name in the UI. This pops up the "Add more test data" dialog, pre-populated with the other platforms that have data for this exact test. All you have to do is hit add.

To add data manually, find the "+Add more test data" link on the left-hand side, where the data series are listed:

Muting additional data points

Once you become familiar with graph server, it is common to have multiple data series on the graph at a time. This produces a lot of confusing data points when you are trying to zoom in and investigate the values for a given data point.

Luckily, graph server has a neat feature that allows you to mute a data series. Each series has a checkbox (checked by default) in the left-hand sidebar that lists the data series.

If you toggle the checkbox off, it will mute (hide) that series.

Common practice is to load up a bunch of related series, and mute/unmute to verify revisions, dates, etc. for a visible regression.

Tree

Branch names and confusion

We have a variety of branches at Mozilla. Here are the main ones that we see alerts on:

- Mozilla-Inbound (PGO, Non-PGO)

- Autoland (PGO, Non-PGO)

- Mozilla-Beta (all PGO)

Linux and Windows builds have PGO, OSX does not.

When investigating alerts, always look at the Non-PGO branch first. Expect to find about 50% of changes on Mozilla-Inbound and 50% on Autoland.

The volume on each branch is something to be aware of. Mozilla-Inbound and Autoland have higher volume, which means that alerts will be generated faster and it will be easier to track down the offending revision.

A final note: Mozilla-Beta is a branch where little development takes place. The volume is really low, and alerts come 5 days (or more) later. It is important to address Mozilla-Beta alerts ASAP because that is what we are shipping to customers.

What is coalescing

Coalescing is a term we use for what happens when we schedule jobs to run on a given machine. When the load is high, these jobs are placed in a queue, and the longer the queue gets, the more jobs we skip. This allows us to get results on more recent changesets faster.

This affects Talos numbers because regressions then show up over more than one pushed changeset. We have to manually fill in the coalesced jobs (sometimes including builds) to ensure we blame the right changeset for the regression.
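The following toy model shows why coalescing spreads a regression over more than one changeset. It assumes a single test job per push. It also assumes that whenever the machine picks up work, it runs only the newest pending push and skips the older ones. `simulate_coalescing` and its parameters are hypothetical, and real scheduling is more involved.

```python
from typing import List, Tuple


def simulate_coalescing(pushes: List[str], arrivals_per_tick: int) -> Tuple[List[str], List[str]]:
    """Toy model of coalescing for a single test job.

    Each tick, `arrivals_per_tick` new pushes (oldest first) join the queue and
    the machine runs one job: the newest pending push. The older pending pushes
    are skipped (coalesced). `arrivals_per_tick` must be >= 1.
    Returns (tested_pushes, skipped_pushes).
    """
    tested: List[str] = []
    skipped: List[str] = []
    for start in range(0, len(pushes), arrivals_per_tick):
        pending = pushes[start:start + arrivals_per_tick]
        tested.append(pending[-1])    # newest pending push gets a result
        skipped.extend(pending[:-1])  # older pending pushes get no data
    return tested, skipped


pushes = ["p1", "p2", "p3", "p4", "p5", "p6"]
print(simulate_coalescing(pushes, 1))  # low load: every push is tested
print(simulate_coalescing(pushes, 3))  # high load: only p3 and p6 are tested
```

Under high load, a regression introduced by p5 would first appear at p6. The last good result would be p3, so p4 through p6 all remain suspects until the skipped jobs are filled in.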

Some things to be aware of:

- missing test jobs - this could be as easy as waiting for jobs to finish, or scheduling the missing job if it was coalesced; otherwise, it could be a missing build.

- missing builds - we would have to generate the builds, which automatically schedules test jobs; sometimes these test jobs are also coalesced and not run.

- results might not be possible due to build failures or test failures

- pgo builds are not coalesced; they just run much less frequently. Most likely a pgo build isn't the root cause

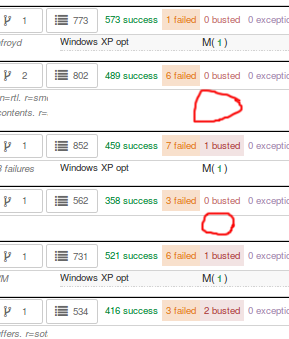

Here is a view on Treeherder of missing data (usually due to coalescing):

Note the two pushes that have no data (circled in red). If the regression happened around here, we might want to backfill those two jobs. That way we can be sure we are looking at the single push that caused the regression, rather than a range of more than one push.
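Picking which jobs to backfill amounts to listing the pushes between the last good result and the first bad one that have no data. Here is a minimal sketch. It assumes pushes are given oldest-first and results are keyed by revision, and `pushes_to_backfill` is a hypothetical name.

```python
from typing import Dict, List


def pushes_to_backfill(pushes: List[str], results: Dict[str, float],
                       last_good: str, first_bad: str) -> List[str]:
    """List pushes strictly between `last_good` and `first_bad` that have no
    result, i.e. candidates for backfilling.

    Assumes `pushes` is ordered oldest-first and both revisions appear in it.
    """
    start = pushes.index(last_good)
    end = pushes.index(first_bad)
    return [rev for rev in pushes[start + 1:end] if rev not in results]


pushes = ["a", "b", "c", "d", "e"]
results = {"a": 100.0, "b": 100.0, "e": 110.0}  # "c" and "d" were coalesced
print(pushes_to_backfill(pushes, results, last_good="b", first_bad="e"))  # ['c', 'd']
```

If the returned list is empty, the alerted push already stands alone. In that case, retriggers are more useful than backfills.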

What is an uplift

Every 6 weeks we release a new version of Firefox. When we do that, the code that developers check into the nightly branch gets uplifted (think of this as a large merge) to the Beta branch. Now all the code, features, and Talos regressions are on Beta.

This affects the Performance Sheriffs because we will get a big pile of alerts for Mozilla-Beta. These need to be addressed rapidly. Luckily almost all the regressions seen on Mozilla-Beta will already have been tracked on Mozilla-Inbound or Autoland.

What is a merge

Many times each day we merge code from the integration branches into the main branch and back. This is a common process in large projects. At Mozilla, the majority of the code for Firefox is checked into Mozilla-Inbound and Autoland. It is then merged into Mozilla-Central (also referred to as Firefox), and once merged, it gets merged back into the other branches. If you want to read more about this merge procedure, here are the details.

Here is an example of what a merge looks like on Treeherder:

Note that the topmost revision has the commit message "merge m-c to m-i". This is pretty standard, and you can see that there is a series of changesets, not just a few related patches.

This affects alerts because a regression that lands on Mozilla-Inbound will be merged into Firefox, then into Autoland. Most likely this means that you will see duplicate alerts on the other integration branch.

- note: we do not generate alerts for the Firefox (Mozilla-Central) branch.

What is a backout

We often back out or hotfix code because it is causing a build failure or unittest failure. The Sheriff team generally handles this process. Backouts/hotfixes usually land within 3 hours of the original push (i.e. before we have 12 future changesets). As a result, we could get an alert 6 hours later and find no lasting regression on the graph, only a temporary spike for a few data points.

When looking for a backout on Treeherder, note that backouts all mention the backout in the commit message:

- note: the image above mentions the bug that was backed out; sometimes it mentions the revision instead

Backouts that affect Perfherder alerts always generate a set of improvements and regressions. These are usually easy to spot on graph server. We just need to annotate the set of alerts for the given revision as a 'backout', with the bug, to track what took place.
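A backout shows up as a regression followed by an improvement of similar size on the same test and platform, so candidate pairs can be found mechanically. The sketch below assumes a simplified, hypothetical `Alert` record and a 50% relative tolerance on magnitudes. Neither reflects Perfherder's actual data model. The tolerance is loose because the percentages are not symmetric: going from 100 to 105 is a 5% regression, but going back from 105 to 100 is about a 4.8% improvement.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Alert:
    revision: str
    platform: str
    test: str
    is_regression: bool
    magnitude: float  # absolute percent change, e.g. 5.0 for 5%


def backout_pairs(alerts: List[Alert], push_order: List[str],
                  tolerance: float = 0.5) -> List[Tuple[Alert, Alert]]:
    """Pair each regression with the first later improvement on the same
    platform/test whose magnitude is within `tolerance` (relative)."""
    position = {rev: i for i, rev in enumerate(push_order)}
    pairs: List[Tuple[Alert, Alert]] = []
    for reg in alerts:
        if not reg.is_regression:
            continue
        for imp in alerts:
            if (not imp.is_regression
                    and (imp.platform, imp.test) == (reg.platform, reg.test)
                    and position[imp.revision] > position[reg.revision]
                    and abs(imp.magnitude - reg.magnitude) <= tolerance * reg.magnitude):
                pairs.append((reg, imp))
                break  # one backout candidate per regression
    return pairs


# Made-up sample alerts.
alerts = [
    Alert("r1", "linux64", "tp5o", True, 5.0),
    Alert("r2", "win7", "tsvgx", True, 3.0),
    Alert("r3", "linux64", "tp5o", False, 4.8),
]
print(backout_pairs(alerts, ["r1", "r2", "r3"]))  # r1 paired with r3
```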

Here is a view on graph server of what appears to be a backout (it could also be a fix that landed quickly):

What is PGO

PGO is Profile Guided Optimization: we do a build, run it to collect metrics, and then optimize based on those metrics. We only release PGO builds. On the integration branches we do these periodically (every 6 hours) or as needed, and we follow the same pattern for Mozilla-Central. Because these builds take considerably longer (2+ times as long), we don't do this for every commit into our integration branches.

How does this affect alerts? We care most about PGO alerts: that is what we ship! Most of the time an alert will be generated for a Non-PGO build, and then a few hours or a day later we will see alerts for the PGO build.

Pay close attention to the branch the alerts are on. Most likely you will see an alert on the Non-PGO branch first (e.g. Mozilla-Inbound-Non-PGO). Roughly a day later, a similar alert will show up on the PGO branch (e.g. Mozilla-Inbound).

Caveats:

- OSX does not do PGO builds, so we do not have -Non-PGO branches for those platforms. (i.e. we only have Mozilla-Inbound)

- PGO alerts will probably have different regression percentages, but the overall list of platforms/tests for a given revision will be almost identical
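One way to check the second caveat is to compare the (platform, test) pairs alerted for the same revision on the Non-PGO and PGO branches. The sketch below uses Jaccard similarity as an assumed measure of 'almost identical'. The function name, input shape and sample data are hypothetical.

```python
from typing import Set, Tuple

Key = Tuple[str, str]  # (platform, test)


def alert_overlap(non_pgo: Set[Key], pgo: Set[Key]) -> float:
    """Jaccard similarity between the alerted (platform, test) pairs of a
    Non-PGO alert summary and a PGO alert summary for the same revision."""
    union = non_pgo | pgo
    if not union:
        return 1.0  # two empty summaries are trivially identical
    return len(non_pgo & pgo) / len(union)


# Made-up sample data.
non_pgo = {("linux64", "tp5o"), ("win7", "tp5o"), ("win7", "tsvgx")}
pgo = {("linux64", "tp5o"), ("win7", "tp5o")}
print(alert_overlap(non_pgo, pgo))  # about 0.67
```

The regression percentages are deliberately ignored here, since the caveat says they will probably differ between the two builds.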

Alert

What alerts are displayed in Alert Manager

By default, Perfherder Alerts shows untriaged alerts of multiple types. It is a goal to keep these lists empty! You can view alerts that are improvements or in any other state (e.g. investigating, fixed, etc.) by using the drop-down at the top of the page.

Do we care about all alerts/tests

Yes, we do. Some tests produce invalid alerts more often, mostly due to noise in the tests. We also adjust the alert threshold per test: the default is 2%, but for Dromaeo it is 5%. If we consider a test too noisy, we consider removing it entirely.
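The per-test threshold can be sketched as a percent-change check. This is an illustration, not Perfherder's code. The function names are hypothetical, and only the 2% default and the 5% Dromaeo value come from this page. Matching Dromaeo tests by name prefix is an assumption.

```python
DEFAULT_THRESHOLD_PCT = 2.0  # default alert threshold from this page
DROMAEO_THRESHOLD_PCT = 5.0  # Dromaeo threshold from this page


def alert_threshold_pct(test: str) -> float:
    """Return the alert threshold for a test (prefix matching is an assumption)."""
    return DROMAEO_THRESHOLD_PCT if test.startswith("dromaeo") else DEFAULT_THRESHOLD_PCT


def exceeds_threshold(test: str, old: float, new: float) -> bool:
    """True if the percent change from `old` to `new` reaches the test's
    threshold. Assumes `old` is a positive baseline value."""
    change_pct = abs(new - old) * 100 / old
    return change_pct >= alert_threshold_pct(test)


print(exceeds_threshold("tp5o", 100.0, 103.0))         # True: 3% >= 2%
print(exceeds_threshold("dromaeo_css", 100.0, 103.0))  # False: 3% < 5%
```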

Here are some platform/test exceptions in what we run:

- Linux 64bit - the only platform on which we run dromaeo_dom

- Linux 32/64bit - the only platforms on which no platform_microbench tests run, due to high noise levels

- Windows 7 - the only platform that supports xperf (the toolchain is only installed there)

- Windows 7/10 - heavy profiles don't run here, because cloning the big profiles takes too long; these are tp6 tests that use heavy user profiles

Lastly, we should prioritize alerts on the Mozilla-Beta branch, since those affect more people.

What does a regression look like on the graph

Almost all of our tests measure time. This means that the lower the score, the better. Whenever the graph increases in value, that is a regression.

Here is a view of a regression:

We have some tests that measure internal metrics, and a few of those report scores where higher is better. This is confusing, but we refer to these as reverse tests. The reverse tests are:

- canvasmark

- dromaeo_css

- dromaeo_dom

- rasterflood_gradient

- speedometer

- tcanvasmark

- v8 version 7
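The direction rule can be written as a small lookup. This sketch assumes the reverse tests are identified by the names listed above. `v8_7` is an assumed identifier for 'v8 version 7', and `is_regression` is a hypothetical helper.

```python
# Reverse tests from the list above; "v8_7" is an assumed identifier for "v8 version 7".
REVERSE_TESTS = frozenset({
    "canvasmark",
    "dromaeo_css",
    "dromaeo_dom",
    "rasterflood_gradient",
    "speedometer",
    "tcanvasmark",
    "v8_7",
})


def is_regression(test: str, old: float, new: float) -> bool:
    """Return True if moving from `old` to `new` is a regression for `test`."""
    if test in REVERSE_TESTS:
        return new < old  # higher is better, so a drop is a regression
    return new > old      # time-based: lower is better, so a rise is a regression


print(is_regression("tp5o", 100.0, 110.0))         # True
print(is_regression("speedometer", 100.0, 110.0))  # False
```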

Here is a view of a reverse regression:

Why does Alert Manager print -xx%

The alert will either be a regression or an improvement. The alerts we show by default are regressions only. It is important to know the severity of an alert. For example, a 3% regression is important to understand, but a 30% regression probably needs to be fixed ASAP. The severity is shown as XX% in the UI. There is no + or - to indicate improvement or regression, because this is an absolute number. Use the bar graph to the side to determine which type of alert this is.

NOTE: for the reverse tests we take the direction into account, so the bar graph will point in the correct direction.
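Here is an illustration of how the absolute XX% figure and the separate direction fit together. This is a hypothetical formatting helper, not how the Alert Manager UI is implemented. The caller is assumed to know whether the test is a reverse test and to pass `lower_is_better` accordingly.

```python
def alert_label(old: float, new: float, lower_is_better: bool = True) -> str:
    """Format an alert as an unsigned percentage plus its direction.

    The percentage is absolute, as in the UI; the direction is derived
    separately, taking reverse tests (lower_is_better=False) into account.
    Assumes `old` is a positive baseline value.
    """
    pct = abs(new - old) * 100 / old
    worse = new > old if lower_is_better else new < old
    kind = "regression" if worse else "improvement"
    return f"{pct:.0f}% {kind}"


print(alert_label(100.0, 103.0))                         # 3% regression
print(alert_label(100.0, 130.0, lower_is_better=False))  # 30% improvement
```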

Noise

What is Noise

Generally, a test reports values that fall within a range instead of a single consistent value. The larger the range of 'normal' results, the more noise we have.

Some tests will post results in a small range, and when we get a data point significantly outside the range, it is easy to identify.

The problem is that many tests have a large range of expected results. This makes it hard to determine what a regression is when the normal range is ±4% from the median and we have a 3% regression. The shift is obvious in the graph over time, but hard to tell until you have many future data points.
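Here is a worked example of the ±4% case, using hypothetical numbers. The history below spreads 4% either side of its median of 100, so a single new result of 103 (a 3% regression) still sits inside the normal band. `noise_band_pct` is an illustrative helper, not a Perfherder function.

```python
from statistics import median
from typing import List


def noise_band_pct(values: List[float]) -> float:
    """Largest deviation from the median, as a percentage of the median."""
    mid = median(values)
    return max(abs(v - mid) for v in values) * 100 / mid


history = [96.0, 104.0, 100.0, 98.0, 102.0, 100.0]  # hypothetical results
new_point = 103.0                                    # one result after a 3% regression

band = noise_band_pct(history)                                    # 4.0, i.e. +/-4%
shift = abs(new_point - median(history)) * 100 / median(history)  # 3.0
print(band, shift, shift < band)  # 4.0 3.0 True: the new point looks like noise
```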

Why can we not trust a single data point

This is a problem we have dealt with for years with no perfect answer. Some reasons we do know are:

- the test is noisy due to timing, disk I/O, etc.

- the specific machine might have slight differences

- sometimes the browser takes longer to start, or a page load hangs for a couple of extra seconds

The short answer is we don't know and have to work within the constraints we do know.

Why do we need 12 future data points

We are re-evaluating our assertions here, but the more data points we have, the more confidence we have in the analysis of the raw data to point out a specific change.

This causes problems when we land code on Mozilla-Beta, where it can take 10 days to get 12 data points. We sometimes rerun tests: just retriggering a job provides more data points, which can help us generate an alert if needed.
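To illustrate why more data points help, here is a simplified sketch. It uses Welch's t statistic to compare a window of results before a push with a window after it. Welch's t-test and the helper name are assumptions for illustration. This is not presented as Perfherder's actual algorithm or threshold.

```python
from math import sqrt
from statistics import mean, variance
from typing import List


def welch_t(before: List[float], after: List[float]) -> float:
    """Welch's t statistic for the shift in mean between two windows.

    Each window needs at least 2 values, and the windows must not both have
    zero variance (that would divide by zero).
    """
    std_err = sqrt(variance(before) / len(before) + variance(after) / len(after))
    return (mean(after) - mean(before)) / std_err


# Hypothetical results: a 3% shift with the same spread on both sides.
before_12, after_12 = [98.0, 102.0] * 6, [101.0, 105.0] * 6
before_2, after_2 = [98.0, 102.0], [101.0, 105.0]
print(round(welch_t(before_12, after_12), 2))  # 3.52
print(round(welch_t(before_2, after_2), 2))    # 1.06
```

The means and spread are the same in both cases. Even so, 12 points on each side give a t value of about 3.5, while 2 points on each side give about 1.1. The larger windows make the same 3% shift stand out from the noise.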

Can't we do smarter analysis to reduce noise

Yes, we can. We have other projects, and a master's thesis has been written on this subject. The reality is that we will still need future data points to show a trend. Depending on the source of data, we will also need different algorithms to analyze it.

Duplicate / new alerts

One problem with coalescing is that we sometimes generate an original alert on a range of changes, then when we fill in the data (backfilling/retriggering) we generate new alerts. This causes confusion while looking at the alerts.

Here are some scenarios in which duplication will be seen:

- backfilling data from coalescing: you will see a similar alert on the same branch/platform/test but a different revision
  - action: reassign the alerts to the original alert summary so all related alerts are in one place!

- we merge changesets between branches
  - action: find the original alert summary on the upstream branch and mark the specific alert as downstream to that alert summary

- pgo builds
  - action: reassign these to the non-pgo alert summary (if one exists), or downstream to the correct alert summary if this originally happened on another branch

In Alert Manager it is good to acknowledge the alert and use the reassign or downstream actions. This helps us keep track of alerts across branches whenever we need to investigate in the future.
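The scenarios above reduce to a small decision rule, encoded below with a hypothetical `AlertKey` record. The field names and string actions are illustrative, and in practice the decision is made by hand in Alert Manager.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AlertKey:
    branch: str    # e.g. "mozilla-inbound" or "autoland"
    pgo: bool      # True for the PGO build, False for Non-PGO
    platform: str
    test: str
    revision: str


def triage_action(new: AlertKey, original: AlertKey) -> Optional[str]:
    """Suggest how to handle `new` relative to an existing `original` alert."""
    if (new.platform, new.test) != (original.platform, original.test):
        return None           # unrelated alerts
    if new.branch != original.branch:
        return "downstream"   # arrived via a merge from the upstream branch
    if new.pgo and not original.pgo:
        return "reassign"     # PGO alert follows the earlier Non-PGO alert
    if new.pgo == original.pgo and new.revision != original.revision:
        return "reassign"     # backfilling split the original range
    return None


orig = AlertKey("mozilla-inbound", False, "linux64", "tp5o", "abc")
print(triage_action(AlertKey("autoland", False, "linux64", "tp5o", "xyz"), orig))  # downstream
```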

Weekends

On weekends (Saturday/Sunday) and many holidays, we find that the volume of pushes is much smaller, so far fewer tests are run. For many tests, especially the noisier ones, the few data points we collect on a weekend are much less noisy (falling to either the top or the bottom of the noise range).

Here is an example view of data that behaves differently on weekends:

This affects the Talos Sheriff because on Monday, when the volume of pushes picks up, we get a wider range of values. Due to the way we calculate a regression, we then see a shift in our expected range on Monday. Usually these alerts are generated Monday evening/Tuesday morning. They are typically small regressions (<3%) on the noisier tests.
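A triage aid for this pattern could flag small alerts raised between Monday evening and Tuesday morning. The sketch is hypothetical: the 17:00 and 12:00 cut-offs are assumptions, and only the <3% figure comes from this page. It also does not check whether the test is one of the noisier ones.

```python
from datetime import datetime


def likely_weekend_artifact(alert_time: datetime, magnitude_pct: float) -> bool:
    """Flag small alerts generated Monday evening or Tuesday morning.

    The 17:00 and 12:00 cut-offs are assumptions; the <3% size comes from this page.
    Holidays and the test's noise level are not considered.
    """
    weekday = alert_time.weekday()  # Monday == 0, Tuesday == 1
    monday_evening = weekday == 0 and alert_time.hour >= 17
    tuesday_morning = weekday == 1 and alert_time.hour < 12
    return (monday_evening or tuesday_morning) and magnitude_pct < 3.0


print(likely_weekend_artifact(datetime(2024, 1, 1, 19), 2.0))  # Monday evening: True
print(likely_weekend_artifact(datetime(2024, 1, 3, 9), 2.0))   # Wednesday: False
```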

Multi Modal

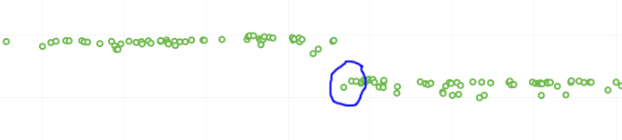

Many tests are bi-modal or multi-modal. This means that their results cluster around 2 or 3 consistent values. Instead of values scattered between the low and the high, you will see 2 values: the lower one and the higher one.

Here is an example of a graph that has two sets of values (with random ones scattered in between):

This affects the alerts and results because sometimes we get a series of results whose mix of modes differs from the original. Of course this generates an alert. A day later you will probably see that we are back to the multi-modal pattern we see historically. Some of this is affected by the weekends.
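To tell whether a new run of results has a different mix of modes, you can split the history into a low mode and a high mode. Then compare the share of points in the high mode. This minimal sketch assumes exactly two modes and uses a simple one-dimensional two-cluster k-means. The function names are hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def split_modes(values: List[float], iterations: int = 20) -> Tuple[float, float]:
    """One-dimensional two-cluster k-means; returns (low_center, high_center)."""
    low, high = min(values), max(values)
    for _ in range(iterations):
        cut = (low + high) / 2
        lower = [v for v in values if v <= cut]
        upper = [v for v in values if v > cut]
        if not lower or not upper:
            break  # values do not separate into two modes
        low, high = mean(lower), mean(upper)
    return low, high


def high_mode_share(window: List[float], low: float, high: float) -> float:
    """Fraction of values in `window` that fall in the high mode."""
    cut = (low + high) / 2
    return sum(v > cut for v in window) / len(window)


history = [100.0, 101.0, 100.0, 150.0, 151.0, 100.0, 150.0, 101.0, 151.0, 150.0]
recent = [100.0, 101.0, 100.0, 100.0]
low, high = split_modes(history)
print(high_mode_share(history, low, high), high_mode_share(recent, low, high))  # 0.5 0.0
```

Here the history is split evenly between the two modes, while the recent window sits entirely in the low mode. This is the kind of shift that can trigger an alert and then revert a day later.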

Random Noise

Random noise happens all the time. In fact, our unittests fail 2-3% of the time because a test or two fails randomly.

This doesn't affect Talos alerts as much, but keep in mind that if you cannot determine a trend for an alerted regression and have done a lot of retriggers, then it is probably not worth the effort to find the root cause.