Identity/CryptoIdeas/04-Delta-Sync

Delta-Based Data Synchronization

- Brian Warner, Chris Karlof, Feb-2013

Summary: a scheme to efficiently synchronize browser data (bookmarks, passwords, etc) between multiple devices, with end-to-end encryption.

Problem Statement

The goal of PICL is to provide a unified browser experience across multiple devices: all your browsers behave as extensions of the same collective tool. Changes you make on one device should be reflected on the other devices, and any device should be sufficient to give you access to all your data.

This is a specific instance of a data-synchronization problem, not unlike the problem that Git or Dropbox seeks to solve. The data in question contains things like a table of bookmarks, and the different devices are browsers in different places (or other tools which need access to the same data).

There are many aspects to this problem that must be solved, but merging tends to be the most challenging: what to do when multiple devices are changed at the same time. This problem could be eliminated by locking (which is not practical), or minimized by frequent synchronizing (to reduce the opportunities to get out of sync), but when devices can be offline for arbitrary periods of time, the problem cannot be avoided completely. If the user makes change A on one device, and change B on another device, the goal is to provide them with a dataset that looks as if they'd made both changes on the same device. If A and B touch different parts of the data, they should merge without fuss. If they modify the same thing, or A modifies an item that B deletes, then the result should at least be plausible, and should be as predictable and similar to the single-device case as possible.

The collection of data that must be merged depends upon the data type and how it is used. Bookmarks and passwords are conceptually "one big dataset": every device is reading and modifying the same tree or table of data. However "open tabs on other browsers" are currently read-only: each device must publish its open tabs, but merely allows the user to see the tabs from other machines. (In the future we might treat the set of open tabs as a common dataset to keep in sync, so opening a tab on one device would immediately open that same tab on all other devices, but that's not how we use browser today). The merge task is scoped to the collection, and is thus trivial for open-tabs where there is only one writer.

Two other goals are efficiency (transfer and store as little data as possible) and security (expose the user's data to as few machines and parties as possible).

Other constraints may include:

- Partial fetch: on very small devices, it may not be feasible to pull all bookmarks, and perhaps it should be possible to download only the most recently-used subset of them.

- Early access: when pulling a large dataset, it may be nice to provide user access to some frequently-used subset before the entire dataset is available

- Gentle on the server: users with large datasets should not cause database timeouts. Pagination might help, or breaking the dataset into smaller pieces.

Merge Strategies and Locations

We can take a lot of inspiration from Git. In the Git "central canonical repository" approach, "git push" never does any merging (it is only allowed to make fast-forward changes), and "git pull" is really a "git fetch" (which only makes fast-forward changes) plus a "git merge". So each client maintains a shadow copy of the server's current "canonical" version, and merges are performed against that shadow copy. This puts the burden of merge on the client, which must make the decisions before it can update the central repo.

We explore the client-side merge in this document.

An alternative approach would be to make the server retain a shadow copy of each device's state. To push changes, the client first updates the server's shadow (this is always a fast-forward). Then the server performs a merge with the current canonical dataset to produce a new canonical dataset, and sends this proposed version down to all devices. If the first device has made more local changes since updating the shadow, the proposed version is rejected, and the client starts the process again. This puts the burden of the merge on the server.

Git retains version history for the benefit of users, as any good revision-control system should. But it also helps with merging. By comparing the old and new versions of a repository, the tool can deduce the user's intended changes, which can then be applied to a different starting point. Most VCSes treat the revision as the primary state, but some (like Darcs) try to retain the changes themselves, and only build the state when necessary (like doing a checkout).

Git uses hash-based revision identifiers, with zero or more parent pointers, to capture an arbitrary DAG of revisions. This helps manage merging when repositories can be arbitrarily connected in any topology. Our task does not require such a general solution: we can assume a single central repository. So if we use client-side merging, we can use simple integers as revision numbers. (this may turn out to be insufficient, in which case we can fall back to git-style parent-revision-id lists).

Overall Architecture

We assume that each datatype has a canonical "native store", where the existing browser code expects to find it: e.g. the Places database for bookmarks. We expect that it is possible to enumerate the native store and to observe changes, and that our code can make a "write if not modified since" operation. This write operation must be atomic. The idea is that our code gets an event that says the user has changed the dataset (moving it to version X), then our code talks to the server and figures out merging stuff, then tells the native store "if you're still at version X, please make these changes". If the native store has moved on to version Y (because the user made another change), our code will update and merge and try again.

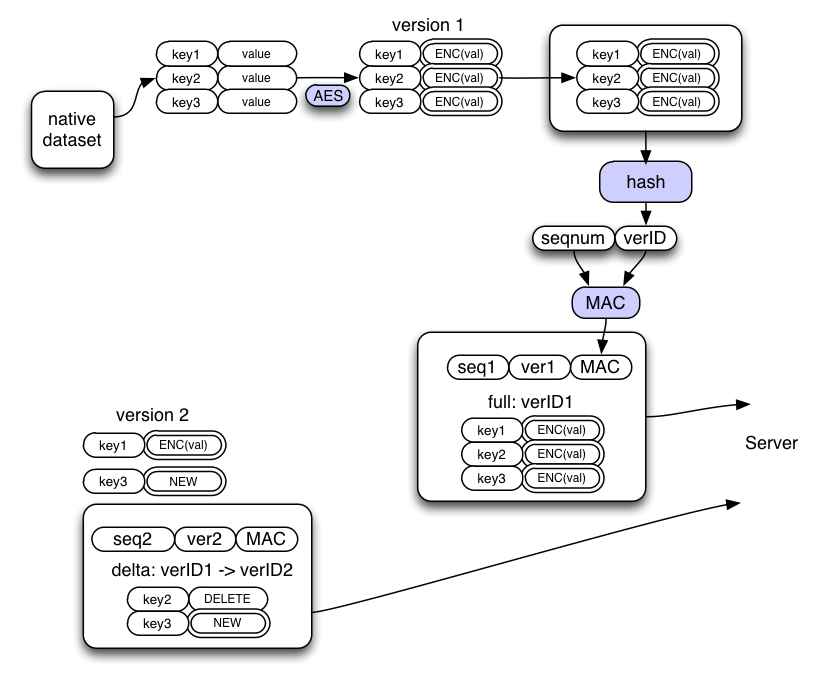

Our code will maintain a synchronizable representation of the native store using key-value pairs ("KVs"). The values in the pairs are then encrypted, and the resulting table of key-encryptedvalue pairs ("KEVs") is called a "revision". By sorting and hashing this table, we get a repeatable strong "version-ID". Clients then sign (or MAC) this version-ID (including a sequence number) to prevent the server from selectively omitting or replaying individual records.

Clients can compute deltas between these KEV sets to efficiently move the server (or another client) from one revision to another. The deltas contain two kinds of instructions: ADD/MODIFY(key, encryptedvalue) and DELETE(key). The deltas themselves are not signed, but the client which receives them can apply them to a copy of the datastore and compare the hash of the result against the signed hash in the version-ID, before accepting the delta for real.

Server Holds Current Version, and Sometimes Deltas

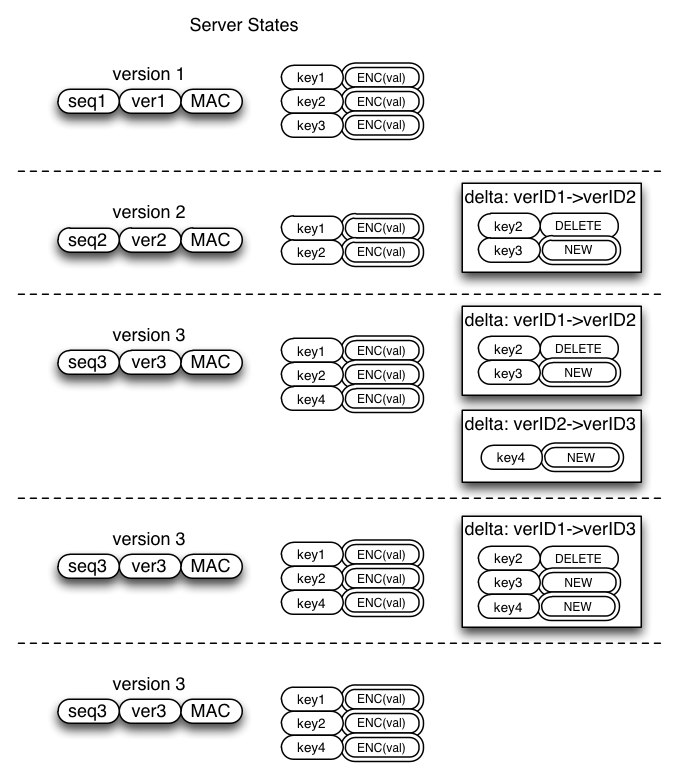

For each collection, the server holds the signed version-ID for the current version of the dataset, and the complete list of (encrypted) KVEs for that version. It may also retain deltas between various historical versions. At any time, the server can merge two deltas (from X->Y and Y->Z into X->Z), or delete deltas that it no longer thinks will be useful. It simply concatenates the ADD/MODIFY/DELETE instructions and discards all but the last instruction for each key.

Clients can always push or fetch the entire KVE set if the receiving side does not have a convenient starting point for a delta, at the expense of bandwidth.

Since the server will generally know about all client devices, it can remember what version is held by each client, and retain a delta from that client's version to the current one, or to some minimal set of intermediate steps. For example, if the server knows of devices that are at version 1, 5, 10, and 12, it could retain the full set of deltas (1->2,2->3,etc,11->12), or the minimal set (1->5,5->10,10->12), or it could decide that the oldest two devices are not worth providing a fast update path and merely retain 10->12. The older devices can still synchronize correctly, but will have to fetch the full KVE list. The newer devices will be able to fetch a small delta.

Client Merging

"Inbound changes" are those initiated by some other device. When our device hears about an inbound change, it must be given the signed version-ID produced by that other device, and enough information to transform its current state to one that matches the inbound version. The server can either provide a delta (or sequence of deltas X->Y->Z), or a full KVE set, whichever is convenient and shortest (for deltas which delete the majority of keys, it may be faster to send the full list even if deltas are available).

When the client learns about

The client starts by checking the signature/MAC on the version-ID, and asserting that it contains a higher sequence number than the client's current version. This prevents the server from forging datasets or replaying older ones (the only remaining mischief is to pretend no new versions have been created). If deltas are used, the client must then copy its KVE set and apply the deltas, then compute the hash and assert that it matches the version-ID.

...